Real Case Study by a Crawleo User: Building a Multi-Agent LinkedIn Content System with n8n

About the Builder

This case study features work by OMI-KALIX, an independent AI engineer who shared the full implementation openly on GitHub.

Project repository: https://github.com/OMI-KALIX/Multi-Agent-AI-Workflow-for-Content-Creation

The system was built and documented in public as part of a build-in-public workflow experiment focused on reducing friction in content creation.

The Problem Was Not Writing

For the engineer behind this project, writing LinkedIn posts was never the hard part. The real issue was everything around it.

Each post required hours of context switching. Research lived in one place, drafts in another, visuals somewhere else, and final formatting happened at the very end when energy was already gone. The result was predictable. Posting became inconsistent, even though the ideas were there.

This was not a motivation problem. It was a workflow problem.

Instead of trying to write faster, the engineer stepped back and redesigned the entire process. The solution was a multi-agent AI system where each step of content creation had a clear owner.

Why a Multi-Agent System

Most content automations rely on a single large prompt and hope for the best. This project took a different approach.

The system mirrors a real team. Each agent has a single responsibility and does one thing well. When combined, the agents produce higher quality output with far less manual effort.

The result is not just automation, but orchestration. Creativity stays human. Friction disappears.

System Overview

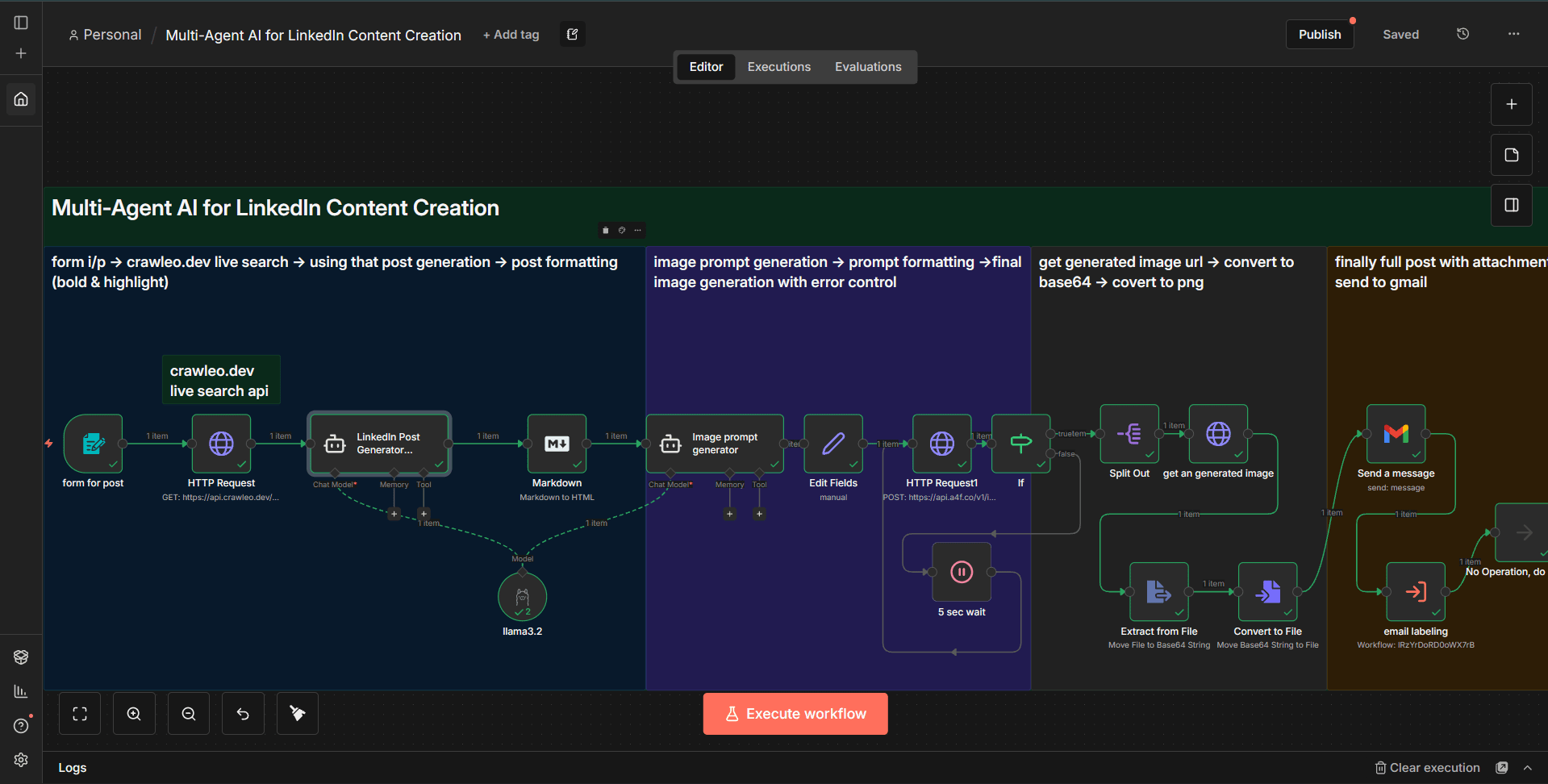

The workflow is a fully automated, end-to-end LinkedIn content system.

A single topic submission triggers the entire pipeline. Within minutes, the system delivers a ready-to-post LinkedIn update with a matching visual, sent directly by email.

At a high level, the system:

- Accepts a topic through a simple form

- Performs live web research for context

- Generates structured LinkedIn copy

- Creates a custom visual asset

- Packages and delivers the final result

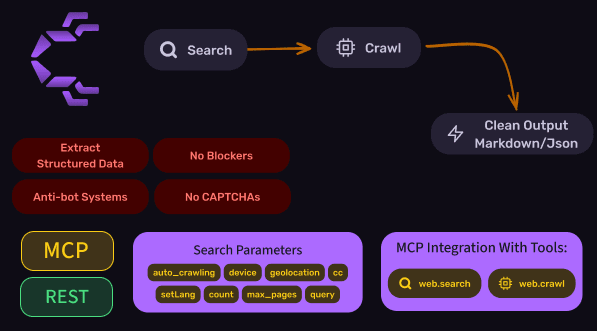

Live research is handled by Crawleo, while orchestration and agent coordination are managed by n8n.
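To make the hand-off between stages concrete, here is a minimal sketch of the same five-step flow in plain Python. The original system is built visually in n8n, so this is not the builder's code: the `PostState` fields, the agent function names, and the placeholder bodies are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PostState:
    """Data handed from one agent to the next (field names are illustrative)."""
    topic: str
    research: str = ""
    post_text: str = ""
    image_ref: str = ""
    delivered: bool = False


# Each agent is a plain function with one responsibility: state in, state out.
Agent = Callable[[PostState], PostState]


def research_agent(state: PostState) -> PostState:
    # Placeholder: the real workflow performs live web research here.
    state.research = f"[research notes about {state.topic}]"
    return state


def content_agent(state: PostState) -> PostState:
    # Placeholder: the real workflow asks a language model to write the post.
    state.post_text = f"Post about {state.topic}, based on: {state.research}"
    return state


def visual_agent(state: PostState) -> PostState:
    # Placeholder: the real workflow calls an image generation model.
    state.image_ref = f"image-for-{state.topic.replace(' ', '-')}.png"
    return state


def delivery_agent(state: PostState) -> PostState:
    # Placeholder: the real workflow emails the text and image.
    state.delivered = bool(state.post_text and state.image_ref)
    return state


PIPELINE: list[Agent] = [research_agent, content_agent, visual_agent, delivery_agent]


def run_pipeline(topic: str) -> PostState:
    """Run every agent in order, starting from a single topic submission."""
    state = PostState(topic=topic)
    for agent in PIPELINE:
        state = agent(state)
    return state


if __name__ == "__main__":
    print(run_pipeline("AI agents"))
```

Because every stage reads and writes the same state object, any single agent can be swapped or tested without touching the others, which is the property the visual workflow relies on.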

The Agents and Their Roles

Research Agent

The Research Agent is responsible for context gathering.

Using Crawleo, it performs live web searches and retrieves clean, structured text or Markdown. Ads, scripts, and noisy markup are removed before the data ever reaches a language model.

This ensures the system works with current, relevant information rather than cached or manually collected sources.
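As a rough illustration of what "clean, structured" input means downstream, the sketch below packs a list of research results into a single context string with a size budget. The result shape (`title`, `url`, `markdown` keys) is a hypothetical one chosen for this example and is not Crawleo's documented response format; the function name and the default budget are likewise assumptions.

```python
def build_research_context(results: list[dict], max_chars: int = 4000) -> str:
    """Join cleaned search results into one context string within a size budget."""
    sections: list[str] = []
    for item in results:
        # Collapse runs of whitespace so the model is not fed empty lines.
        body = " ".join(item.get("markdown", "").split())
        if not body:
            continue  # skip results that came back empty
        section = (
            f"## {item.get('title', 'Untitled')}\n"
            f"Source: {item.get('url', 'unknown')}\n"
            f"{body}"
        )
        candidate = "\n\n".join(sections + [section])
        if len(candidate) > max_chars:
            break  # stop before the context exceeds the budget
        sections.append(section)
    return "\n\n".join(sections)
```

Keeping the source URL next to each excerpt makes it easier to trace a claim in the finished post back to where it came from.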

Content Agent

The Content Agent focuses only on writing.

It takes the structured research output and turns it into a LinkedIn-ready post. Tone, formatting, and structure are handled here, without any concern for sourcing or visuals.

By separating writing from research, the system avoids blank-page paralysis and inconsistent output.
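Since the stack runs a local LLaMA model through Ollama, the writing step can be pictured as one HTTP call to Ollama's local `/api/generate` endpoint. This is a sketch under assumptions rather than the project's actual node configuration: the model name `llama3`, the prompt wording, and the function names are all illustrative.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_post_request(topic: str, research: str, model: str = "llama3") -> dict:
    """Build the JSON payload for a single, non-streaming generation call."""
    prompt = (
        f"Write a LinkedIn post about: {topic}\n\n"
        "Use only the research notes below. Keep it under 200 words, "
        "start with a one-line hook, and end with a question.\n\n"
        f"Research notes:\n{research}"
    )
    # "stream": False asks Ollama to return one JSON object instead of chunks.
    return {"model": model, "prompt": prompt, "stream": False}


def generate_post(topic: str, research: str) -> str:
    """Send the payload to a locally running Ollama server and return the text."""
    data = json.dumps(build_post_request(topic, research)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read())["response"]
```

The prompt carries only writing instructions and the research notes, which mirrors the agent's narrow scope: it never searches, and it never thinks about visuals.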

Visual Agent

The Visual Agent creates a custom image that matches the post.

Image generation models such as DALL·E or Imagen are used to produce visuals aligned with the message. This removes the need for manual design work or external tools.

Delivery Agent

The Delivery Agent closes the loop.

It packages the text and image together and sends the final result via email using services like Gmail or SendGrid. What arrives in the inbox is immediately ready to post.
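The packaging step can be approximated with Python's standard library: one email whose body is the post text and whose attachment is the generated image. This is a hedged sketch, not the workflow's actual Gmail or SendGrid node; the subject line, the attachment filename, the assumption that the image is a PNG, and the SMTP parameters are all placeholders.

```python
import smtplib
from email.message import EmailMessage


def build_post_email(
    sender: str, recipient: str, topic: str, post_text: str, image_bytes: bytes
) -> EmailMessage:
    """Package the post text and its image into a single email message."""
    message = EmailMessage()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = f"LinkedIn post ready: {topic}"
    message.set_content(post_text)  # plain-text body, ready to copy and paste
    message.add_attachment(
        image_bytes, maintype="image", subtype="png", filename="post-visual.png"
    )
    return message


def send_email(
    message: EmailMessage, host: str, port: int, username: str, password: str
) -> None:
    """Send the message over SMTP with STARTTLS (credentials supplied by the caller)."""
    with smtplib.SMTP(host, port) as server:
        server.starttls()
        server.login(username, password)
        server.send_message(message)
```

Splitting message construction from sending keeps the packaging logic testable without a mail server or real credentials.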

Why Crawleo Fits This Architecture

Crawleo plays a critical but focused role in the system.

- It provides real-time web data, not cached results

- Outputs are optimized for LLMs, reducing token waste

- No long-term storage of queries or content is required

- The HTTP-based API integrates cleanly into n8n workflows

For agent-based systems, this reliability matters. Research becomes a dependable input instead of a fragile scraping step that needs constant maintenance.

Measured Results

The impact of the new workflow is clear.

| Metric | Before | After | Impact |
|---|---|---|---|
| Time per post | 3 to 4 hours | 5 to 10 minutes | About 95 percent less time |
| Tools involved | 5 to 7 | One workflow | Major simplification |
| Posting consistency | Sporadic | Regular | Sustainable |

By removing friction, the system makes consistency possible without burnout.
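The time-per-post figure can be checked with simple arithmetic on the reported ranges. The helper name below is illustrative; the inputs are the before and after values from the table, converted to minutes.

```python
def time_saved_percent(before_minutes: float, after_minutes: float) -> float:
    """Percentage reduction in time per post."""
    return (before_minutes - after_minutes) / before_minutes * 100


# Most conservative pairing: shortest "before" (3 h) against longest "after" (10 min).
low = time_saved_percent(180, 10)
# Most favorable pairing: longest "before" (4 h) against shortest "after" (5 min).
high = time_saved_percent(240, 5)

print(f"{low:.1f}% to {high:.1f}% less time per post")
# prints "94.4% to 97.9% less time per post"
```

Both ends of the range sit close to the roughly 95 percent reduction reported above.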

Tech Stack Summary

- Orchestration: n8n, with optional support for Make or Zapier

- AI models: Local LLaMA via Ollama

- Web research: Crawleo

- Image generation: DALL·E or Imagen

- Delivery: Gmail or SendGrid

The entire system is built using visual workflow tools. No heavy custom code is required.

Lessons for Builders

This project highlights a few important ideas for AI engineers and automation builders.

- Understand where the friction is before automating anything

- Split responsibilities across specialized agents

- Treat live web data as a first-class dependency

- Manage complexity with orchestration tools rather than with ever-larger prompts

The biggest improvement came from redesigning the process, not from pushing models harder.

Conclusion

This multi-agent LinkedIn content system is a strong example of practical AI automation done right. The shift was not about removing creativity, but about removing friction.

By combining Crawleo for live research with n8n for orchestration, the engineer built a repeatable, scalable workflow that turns hours of scattered work into minutes of focused output.

For anyone building AI agents, content systems, or automation pipelines, this project offers a blueprint worth studying.