Building Advanced AI Agents: How to Combine RAG with Real-Time Web Search

A common challenge in AI development is creating agents that are not limited to a static training set. To build truly useful tools for enterprise environments, developers often need to combine internal knowledge (like a company handbook) with real-time web search.

This hybrid approach lets an agent treat internal documents as the primary source for company policies and fall back to the web for broader queries, while keeping strict control over which sources are trusted.

- Converting Web Pages to LLM-Friendly Markdown

The first step in any web-integrated pipeline is getting raw HTML into a format an LLM can understand. Tools like Docling handle this well, converting a specific URL into clean Markdown.

By converting a page, such as the EU AI Act, into Markdown, you give the LLM structured text that is easy to summarize and interpret. This is essential for agents that need to "read" a specific page provided by a user in real time.
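Here is a minimal sketch of this step, assuming the `docling` package is installed. `DocumentConverter.convert()` and `document.export_to_markdown()` come from Docling's quickstart. The `url_to_markdown` name and the optional `converter` argument are hypothetical additions, and the argument exists only so the helper can be exercised without network access.

```python
from typing import Any, Optional


def url_to_markdown(url: str, converter: Optional[Any] = None) -> str:
    """Fetch a page and return its content as Markdown.

    Hypothetical helper: `converter` defaults to Docling's DocumentConverter,
    but any object with a compatible .convert(url) method can be injected.
    """
    if converter is None:
        # Imported lazily so this module loads even where Docling is absent.
        from docling.document_converter import DocumentConverter

        converter = DocumentConverter()
    # Docling accepts a URL or local path and returns a conversion result
    # whose .document can be exported to Markdown.
    result = converter.convert(url)
    return result.document.export_to_markdown()
```

Called with the URL a user supplies, the function downloads and converts the page, and the returned string can go straight into the model's prompt.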

- Real-Time Web Search with Domain Filtering

Reading a single page is useful, but broader web search is what makes an agent truly dynamic. With the OpenAI Web Search tool, developers can implement "agentic" searches in which the model decides when to query the web and which results to read in order to answer a question.
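The following minimal sketch shows a single web-search call. It assumes the official `openai` Python SDK and its Responses API. The `web_search` tool type reflects current OpenAI documentation (older SDK versions used `web_search_preview`), the model name `gpt-5-mini` is an assumption, and the helper names are hypothetical. Check all of these against the API version you use.

```python
from typing import Any


def build_web_search_request(question: str, model: str = "gpt-5-mini") -> dict:
    """Assemble keyword arguments for a Responses API call with web search.

    The default model name is an assumption; use any model available to
    your account that supports the web search tool.
    """
    return {
        "model": model,
        # The model decides on its own whether (and how often) to search.
        "tools": [{"type": "web_search"}],
        "input": question,
    }


def web_search_answer(question: str, client: Any = None) -> str:
    """Ask a question and let the model search the web if it needs to."""
    if client is None:
        # Lazy import; OpenAI() reads OPENAI_API_KEY from the environment.
        from openai import OpenAI

        client = OpenAI()
    response = client.responses.create(**build_web_search_request(question))
    # output_text joins the text parts of the model's final message.
    return response.output_text
```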

Key features of this implementation include:

• Domain Filtering: You can restrict the agent to a specific list of "allowed domains" (e.g., government websites or official documentation) so that results come only from sources you trust.

• Reasoning Models: Using a reasoning model such as GPT-5 (mini or nano) lets the agent decide whether it needs to run several searches, or loop over searching and reasoning, to satisfy a query.

• Citations: By using structured output (via Pydantic), the agent can return not just an answer, but a list of URLs and text snippets showing exactly where the information came from.
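These three features can be combined in one request. This sketch assumes three parts of the `openai` SDK: the `responses.parse()` helper, which validates output against a Pydantic model passed as `text_format`; the `filters.allowed_domains` option of the `web_search` tool; and the `reasoning={"effort": ...}` parameter for reasoning models. The `Citation` and `SearchAnswer` models, the domain-normalizing helper, and the default model name are hypothetical choices. Whether structured output can be combined with web search may also depend on the model.

```python
from typing import Any, List
from urllib.parse import urlparse

from pydantic import BaseModel


class Citation(BaseModel):
    url: str
    snippet: str  # the passage the model says supports its answer


class SearchAnswer(BaseModel):
    answer: str
    citations: List[Citation]


def normalize_domain(entry: str) -> str:
    """Reduce entries like 'https://docs.example.gov/path' to a bare host."""
    host = urlparse(entry).netloc if "://" in entry else entry.split("/")[0]
    return host.lower()


def filtered_search_tool(allowed_domains: List[str]) -> dict:
    """Web search tool definition restricted to an allow-list of domains."""
    hosts = sorted({normalize_domain(d) for d in allowed_domains})
    return {"type": "web_search", "filters": {"allowed_domains": hosts}}


def cited_search(
    question: str,
    allowed_domains: List[str],
    client: Any = None,
    model: str = "gpt-5-mini",  # assumed model name
) -> SearchAnswer:
    """Run a domain-filtered search and parse the reply into SearchAnswer."""
    if client is None:
        from openai import OpenAI  # lazy import

        client = OpenAI()
    response = client.responses.parse(
        model=model,
        reasoning={"effort": "low"},  # lets the model plan a few searches
        tools=[filtered_search_tool(allowed_domains)],
        input=question,
        text_format=SearchAnswer,
    )
    return response.output_parsed
```

Normalizing entries to bare host names is a defensive choice that lets you paste full URLs into the allow-list. The model itself reports the citations requested through the schema, so spot-check them against the sources the search tool actually returned.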

- Integrating Internal Knowledge (RAG)

For many clients, the core of an AI assistant is its access to internal instructions or handbooks. In a production environment, this is typically handled via a RAG (Retrieval-Augmented Generation) pipeline.

In this pattern, the handbook is treated as a tool. The agent only calls the "search handbook" function when it determines that the user's question relates to internal data rather than general knowledge or the live web.
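This is a sketch of the handbook-as-a-tool pattern. The function schema follows the Responses API's function-tool format. The handbook text, the `search_handbook` implementation, and its word-overlap ranking are all hypothetical stand-ins for a real RAG retriever, which would normally use embeddings and a vector store.

```python
import json
import re
from typing import Dict, List, Set

# Hypothetical handbook chunks. In production these would come from a vector
# store populated by your RAG ingestion pipeline.
HANDBOOK_CHUNKS: List[str] = [
    "Employees accrue 25 days of paid vacation per year.",
    "Expense reports must be submitted within 30 days of purchase.",
    "Remote work requires written approval from your manager.",
]

# Function-tool definition in the Responses API format. The model reads the
# description to decide whether a question concerns internal data.
SEARCH_HANDBOOK_TOOL: Dict = {
    "type": "function",
    "name": "search_handbook",
    "description": "Search the company handbook for internal policies and procedures.",
    "parameters": {
        "type": "object",
        "properties": {"query": {"type": "string", "description": "What to look up."}},
        "required": ["query"],
        "additionalProperties": False,
    },
}


def _tokens(text: str) -> Set[str]:
    """Lowercase alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def search_handbook(query: str, top_k: int = 2) -> List[str]:
    """Return up to top_k chunks that share words with the query.

    Word overlap is a simple stand-in for embedding similarity.
    """
    q = _tokens(query)
    ranked = sorted(HANDBOOK_CHUNKS, key=lambda c: len(q & _tokens(c)), reverse=True)
    return [c for c in ranked[:top_k] if q & _tokens(c)]


def run_search_handbook(arguments: str) -> str:
    """Execute a model-issued call; `arguments` is the JSON string the model sends."""
    query = json.loads(arguments)["query"]
    return json.dumps(search_handbook(query))
```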

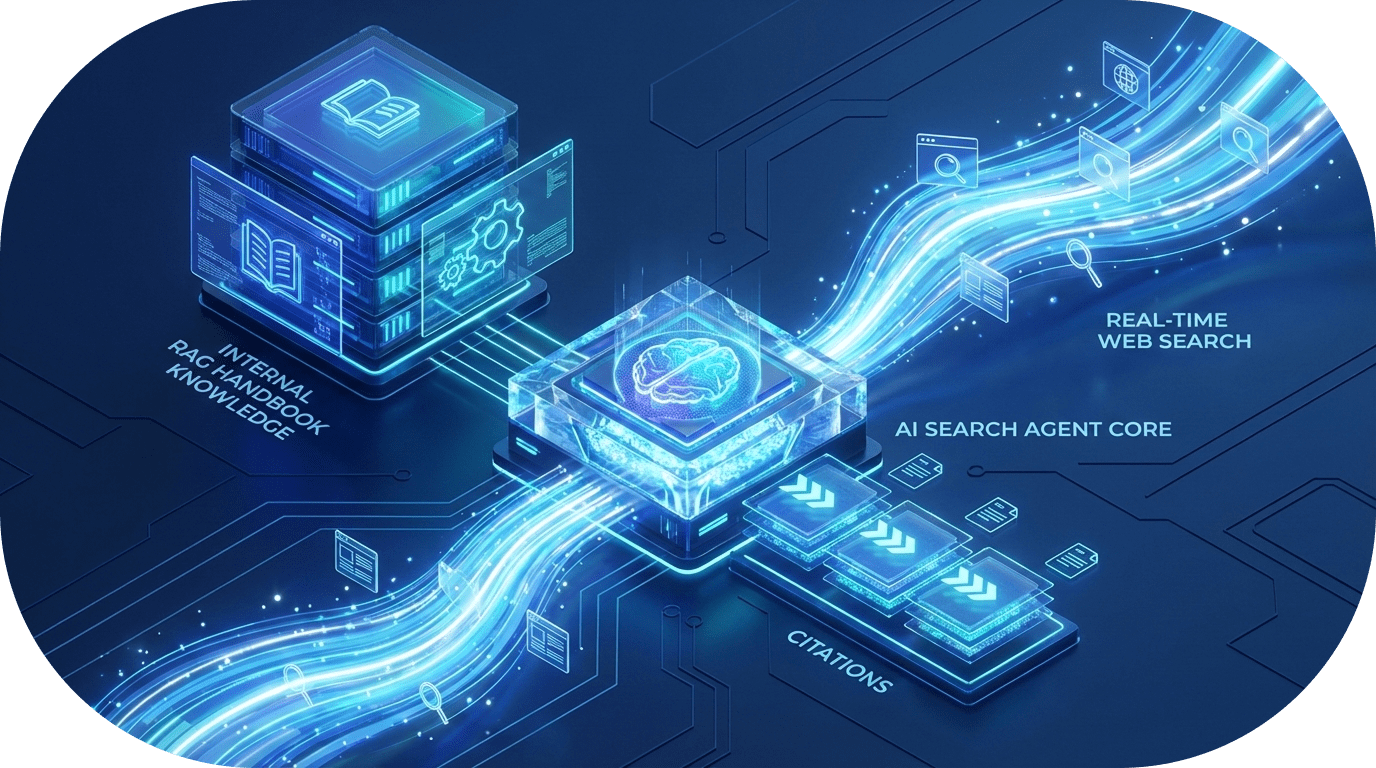

- Bringing it All Together: The Multi-Tool Agent

The real payoff comes when you combine these capabilities into a single interactive agent. Placing each tool function in a dedicated tools folder keeps the architecture clean and makes it easy to add new tools.
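One way to organize a tools folder is a small registry. The sketch below assumes a Python package named `tools`. The decorator, function names, and the placeholder `search_handbook` body are hypothetical. Each tool module registers itself, and the agent only needs `tool_schemas()` and `call_tool()`.

```python
import json
from typing import Any, Callable, Dict, List

# In a real project this registry could live in tools/__init__.py, with each
# tool in its own module (for example tools/handbook.py) that imports `tool`.
_REGISTRY: Dict[str, Dict[str, Any]] = {}


def tool(name: str, description: str, parameters: Dict[str, Any]) -> Callable:
    """Decorator that registers a function together with its JSON schema."""
    def register(fn: Callable) -> Callable:
        _REGISTRY[name] = {
            "fn": fn,
            "schema": {
                "type": "function",
                "name": name,
                "description": description,
                "parameters": parameters,
            },
        }
        return fn
    return register


def tool_schemas() -> List[Dict[str, Any]]:
    """Schemas to pass to the model as its `tools` list."""
    return [entry["schema"] for entry in _REGISTRY.values()]


def call_tool(name: str, arguments: str) -> str:
    """Dispatch a model-issued call by name; arguments arrive as a JSON string."""
    if name not in _REGISTRY:
        return json.dumps({"error": f"unknown tool: {name}"})
    result = _REGISTRY[name]["fn"](**json.loads(arguments))
    return result if isinstance(result, str) else json.dumps(result)


@tool(
    "search_handbook",
    "Search the internal company handbook.",
    {"type": "object", "properties": {"query": {"type": "string"}}, "required": ["query"]},
)
def search_handbook(query: str) -> List[str]:
    # Placeholder body; a real tool would query the RAG index.
    return [f"(handbook results for: {query})"]
```

Adding a new capability then means writing one decorated function in a new module. The agent loop itself does not change.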

A sophisticated search agent follows this decision-making process:

1. Analyze the Query: Does the user want internal info, a specific web page, or a general search?

2. Select the Tool: The agent decides whether to call the handbook tool, the single-page scraper, or the web search tool.

3. Synthesize and Cite: The agent brings the information together from multiple sources (if necessary) and replies in a structured way with citations.
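The three steps above map onto a standard tool-calling loop. This sketch assumes the OpenAI Responses API's function-calling flow, in which `function_call` output items are answered with `function_call_output` inputs keyed by `call_id`. The `run_agent` name, the `handlers` mapping, and the default model are hypothetical. Built-in tools such as `web_search` run on OpenAI's side, so they belong in `tools` but need no entry in `handlers`.

```python
import json
from typing import Any, Callable, Dict, List


def run_agent(
    client: Any,
    question: str,
    tools: List[dict],
    handlers: Dict[str, Callable[..., Any]],
    model: str = "gpt-5-mini",  # assumed model name
    max_turns: int = 5,
) -> str:
    """Minimal tool-calling loop over the Responses API (a sketch)."""
    conversation: List[Any] = [{"role": "user", "content": question}]
    for _ in range(max_turns):
        # Steps 1 and 2: the model analyzes the query and picks tools, if any.
        response = client.responses.create(model=model, tools=tools, input=conversation)
        # Keep the model's own output items (including its calls) in context.
        conversation += response.output
        calls = [item for item in response.output if getattr(item, "type", None) == "function_call"]
        if not calls:
            # Step 3: no more tools requested, so this is the final, cited answer.
            return response.output_text
        for call in calls:
            fn = handlers.get(call.name)
            result = fn(**json.loads(call.arguments)) if fn else {"error": f"unknown tool {call.name}"}
            conversation.append(
                {"type": "function_call_output", "call_id": call.call_id, "output": json.dumps(result)}
            )
    raise RuntimeError("agent did not finish within max_turns")
```

With a real client, `tools` would combine your function schemas with `{"type": "web_search"}`, and `handlers` would map names like `search_handbook` to the local Python functions.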

Conclusion

Combining RAG with real-time web search represents the next step in AI engineering. By using structured outputs, domain filtering, and tool-calling patterns, developers can create AI assistants that are both grounded in private data and aware of the ever-changing world.

For developers looking to implement this, focusing on modular tool structures and type-safe Pydantic models makes these "search-aware" agents much easier to scale.
