How to Build LLM Training Datasets at Scale with Web Crawling

Why LLM Training Requires Web-Scale Data

Modern AI companies and research labs train large language models on billions or even trillions of tokens. Public datasets alone are rarely sufficient, especially for domain-specific models, multilingual systems, or continuously improving foundation models.

A single training initiative can require:

- 100,000 to 1,000,000+ web crawls

- Terabytes of raw HTML and text

- Constant refresh cycles to keep data current

This is why scalable web crawling has become a core component of AI data infrastructure.

The Role of Web Crawling in LLM Dataset Creation

Web crawling enables AI teams to:

- Discover large volumes of publicly available text

- Collect domain-specific knowledge at scale

- Continuously refresh datasets with new information

- Reduce dependence on static, outdated corpora

Unlike traditional scraping, LLM-focused crawling prioritizes content quality, coverage, and structure over page-level precision.

Typical LLM Training Data Pipeline

A scalable LLM dataset pipeline usually follows this architecture:

1. Source Discovery: Identify high-value domains, publications, forums, documentation sites, and public datasets.

2. Automated Crawling at Scale: Crawl hundreds of thousands of URLs using parallel, fault-tolerant crawlers.

3. Content Extraction and Cleaning: Convert raw HTML into clean text or Markdown by removing:

   - Ads and navigation

   - Scripts and styles

   - Duplicate boilerplate

4. Filtering and Deduplication: Apply rules and ML filters to remove:

   - Low-quality or spam content

   - Near duplicates

   - Non-linguistic data

5. Normalization and Chunking: Split documents into token-friendly chunks suitable for training or fine-tuning.

6. Storage and Versioning: Store datasets in object storage with version control for reproducibility.
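The extraction, deduplication, and chunking steps can be sketched with the Python standard library alone. This is a minimal illustration, not a production extractor: the `SKIP_TAGS` set, the function names, and the use of whitespace-separated words as a stand-in for tokens are all assumptions made for the example, and it only catches exact duplicates after normalization (near-duplicate detection needs a fuzzier method).

```python
import hashlib
import re
from html.parser import HTMLParser

# Tags whose contents are treated as boilerplate (an assumption; tune per site).
SKIP_TAGS = {"script", "style", "nav", "header", "footer", "aside"}


class TextExtractor(HTMLParser):
    """Collects visible text, ignoring anything nested inside SKIP_TAGS."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self._parts = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        cleaned = " ".join(data.split())  # collapse runs of whitespace
        if self._skip_depth == 0 and cleaned:
            self._parts.append(cleaned)

    def text(self):
        return " ".join(self._parts)


def html_to_text(html):
    """Convert an HTML string to plain text with boilerplate tags dropped."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


def fingerprint(text):
    """Hash of case- and whitespace-normalized text, for exact-duplicate checks."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def deduplicate(texts):
    """Keep the first occurrence of each distinct fingerprint, preserving order."""
    seen, unique = set(), []
    for t in texts:
        fp = fingerprint(t)
        if fp not in seen:
            seen.add(fp)
            unique.append(t)
    return unique


def chunk_words(text, max_words=200, overlap=20):
    """Split text into overlapping windows of at most max_words words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = text.split()
    step = max_words - overlap
    chunks, start = [], 0
    while start < len(words):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reaches the end of the text
        start += step
    return chunks
```

In a real pipeline the word-based chunker would normally be replaced by one that counts tokens with the target model's tokenizer.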

Scaling Challenges AI Teams Face

Building LLM datasets at scale introduces several challenges:

- Infrastructure cost explosion from long-running crawlers

- Inconsistent data quality across domains

- Legal and privacy concerns around data retention

- Operational complexity of maintaining custom scraping stacks

Many AI teams underestimate how much engineering effort is required to reliably crawl at this scale.

Best Practices for High-Quality Training Data

To build high-quality training datasets, AI teams should follow these principles:

- Prioritize clean text over raw HTML to reduce preprocessing costs

- Use incremental crawling instead of full recrawls

- Track source provenance for auditing and dataset governance

- Avoid aggressive crawling patterns that cause IP blocks

- Continuously evaluate data quality with automated checks
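Incremental crawling and provenance tracking can share one data structure. The sketch below is one possible approach, assuming an in-memory dictionary as the index; `ProvenanceRecord` and `record_if_changed` are hypothetical names for illustration. It stores a content hash per URL and only emits a new record when the content has changed.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ProvenanceRecord:
    url: str
    fetched_at: str       # ISO 8601 timestamp in UTC
    content_sha256: str   # hash of the extracted text


def content_hash(text):
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def record_if_changed(index, url, text, now=None):
    """Return a new ProvenanceRecord if the content is new or changed, else None.

    `index` maps URL -> latest ProvenanceRecord and is updated in place.
    """
    digest = content_hash(text)
    previous = index.get(url)
    if previous is not None and previous.content_sha256 == digest:
        return None  # unchanged since the last crawl: skip reprocessing
    fetched = (now or datetime.now(timezone.utc)).isoformat()
    record = ProvenanceRecord(url=url, fetched_at=fetched, content_sha256=digest)
    index[url] = record
    return record
```

This version still downloads each page before comparing hashes. Where servers support them, HTTP conditional requests (`ETag` with `If-None-Match`, or `Last-Modified` with `If-Modified-Since`) can avoid the download as well.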

Quality improvements at the data layer often outperform architectural model tweaks.
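As a rough illustration of an automated quality check, the function below applies three simple heuristics to a document. The thresholds and check names are assumptions chosen for the example, not recommended values; real pipelines usually calibrate them per domain and often add classifier-based filters.

```python
def quality_report(text, min_words=50, min_alpha_ratio=0.6, max_repeat_ratio=0.3):
    """Run simple heuristic checks and return per-check results."""
    words = text.split()
    # Share of characters that are letters or whitespace (low for markup or number dumps).
    alpha_ratio = (
        sum(ch.isalpha() or ch.isspace() for ch in text) / len(text) if text else 0.0
    )
    # Share of non-empty lines that repeat an earlier line (high for templated spam).
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    repeat_ratio = 1 - len(set(lines)) / len(lines) if lines else 0.0

    checks = {
        "enough_words": len(words) >= min_words,
        "mostly_language": alpha_ratio >= min_alpha_ratio,
        "low_repetition": repeat_ratio <= max_repeat_ratio,
    }
    return {"passed": all(checks.values()), "checks": checks}
```

Returning the individual checks alongside the overall verdict makes it easier to see which rule rejects the most documents for a given source.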

Where Crawleo Fits in Large-Scale Dataset Pipelines

Crawleo is designed to support real-time, large-volume web crawling for AI and data engineering teams.

Key capabilities relevant to LLM dataset creation include:

- Real-time web search and crawling APIs for discovering fresh content

- Multi-format outputs including clean text and Markdown

- Serverless, horizontally scalable architecture for burst crawling workloads

- Zero-data-retention policy, supporting privacy-conscious data pipelines

- LLM-friendly outputs that reduce downstream preprocessing

Instead of maintaining custom crawler infrastructure, teams can integrate Crawleo directly into their dataset pipelines and focus engineering effort on data quality and modeling.

Example: Crawling at Dataset Scale

A simplified crawling workflow might look like:

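```python
import json

from crawleo import search


def store(markdown, path="dataset.jsonl"):
    """Placeholder sink (not part of Crawleo): append one page to a local JSONL file."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps({"markdown": markdown}) + "\n")


# Search for pages and request the crawled content as Markdown.
results = search(
    query="machine learning research papers",
    max_pages=50,
    auto_crawling=True,
    get_page_text_markdown=True,
)

for page in results.pages:
    store(page.markdown)
```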

This pattern scales horizontally when orchestrated across thousands of parallel jobs.
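One way to orchestrate many such jobs on a single machine is a thread pool, sketched below. The `run_jobs` helper and the `crawl_fn` parameter are hypothetical: `crawl_fn` stands for any function that takes a query and returns its results, such as a wrapper around the search call shown earlier. At larger scale the same shape is typically spread across a job queue or serverless workers rather than threads.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def run_jobs(queries, crawl_fn, max_workers=8):
    """Run crawl_fn(query) for each query in parallel.

    Returns (results, errors), both keyed by query, so that one failed
    job does not stop the rest of the batch.
    """
    results, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(crawl_fn, q): q for q in queries}
        for future in as_completed(futures):
            query = futures[future]
            try:
                results[query] = future.result()
            except Exception as exc:  # record the failure and keep going
                errors[query] = exc
    return results, errors


def fake_crawl(query):
    """Stand-in for a real crawl call, used only to exercise run_jobs."""
    if not query:
        raise ValueError("empty query")
    return [f"page about {query}"]
```

Calling `run_jobs(["llm datasets", ""], fake_crawl)` returns one result and one recorded error, which is the behavior a fault-tolerant batch needs: failures are collected for retry instead of aborting the run.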

Continuous Dataset Refresh for Long-Term Model Quality

An LLM trained on stale data performs worse on current topics. Continuous crawling enables:

- Regular dataset updates

- Domain-specific trend capture

- Faster adaptation to new terminology and events

AI teams that treat datasets as living assets gain a long-term competitive advantage.
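A refresh cycle needs some rule for deciding which pages to recrawl. The sketch below assumes a simple age-based policy and a dictionary of last-crawl timestamps; the function name and the policy itself are illustrative assumptions, and real schedulers often also weigh how frequently each source changes.

```python
from datetime import datetime, timedelta, timezone


def due_for_refresh(last_crawled, now, max_age):
    """Return URLs whose last crawl is older than max_age, stalest first.

    `last_crawled` maps URL -> timezone-aware datetime of the last crawl.
    """
    stale = [(ts, url) for url, ts in last_crawled.items() if now - ts > max_age]
    return [url for ts, url in sorted(stale)]
```

For example, with `max_age=timedelta(days=7)` a page last crawled 30 days ago is returned ahead of one crawled 10 days ago, and a page crawled yesterday is skipped.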

Final Thoughts

Web crawling is no longer optional for serious LLM training efforts. At the scale modern AI demands, success depends on automation, reliability, and data quality rather than one-off scraping scripts.

By combining scalable crawling infrastructure with disciplined data pipelines, AI startups and research labs can continuously build and refine training datasets that power better models over time.

Tags

Related Posts

Rate Limiting, Proxies, and CAPTCHAs: Why DIY Scraping Does Not Scale

Building your own web scraping stack seems simple at first, until rate limits, proxy bans, and CAPTCHAs bring everything to a halt. This article explains why DIY scraping fails at scale and how Crawleo removes these hidden costs for developers and AI teams.

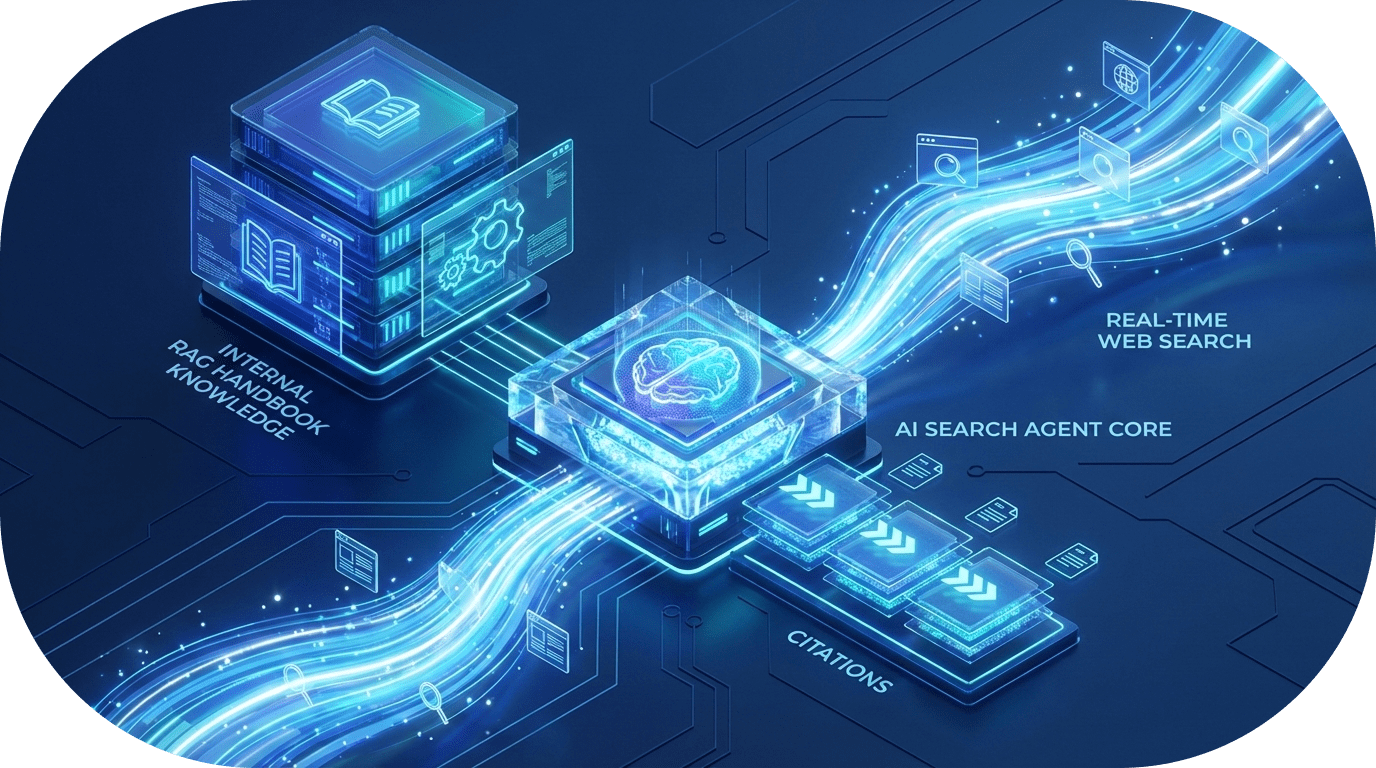

Building Advanced AI Agents: How to Combine RAG with Real-Time Web Search

Learn how to bridge the gap between internal company knowledge and the live web. This guide explores a powerful pattern for building AI agents that can query private handbooks while simultaneously performing filtered real-time web searches to provide the most accurate, cited responses.