Rate Limiting, Proxies, and CAPTCHAs: Why DIY Scraping Does Not Scale

Introduction

Web scraping is often one of the first technical solutions developers reach for when they need external data. A simple script, a few HTTP requests, and the problem looks solved. In reality, scraping at scale introduces challenges that quickly turn into engineering and operational bottlenecks.

Rate limiting, IP blocking, and CAPTCHAs are not edge cases. They are core defenses of the modern web, and proxy management is the ongoing cost of working around them. This is why many teams eventually abandon DIY scraping in favor of managed crawling APIs like Crawleo.

Rate Limiting: The First Wall You Hit

Many websites actively limit how often they accept requests from a single source, typically an IP address. This is known as rate limiting.

Common symptoms include:

- HTTP 429 Too Many Requests responses

- Temporary IP bans

- Silent throttling with incomplete responses

At small scale, adding delays between requests may work. At production scale, rate limiting becomes unpredictable. Different sites enforce different thresholds, and limits can change without notice.
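
The "add delays" approach usually starts as a per-host pacer like the one below. It is a minimal sketch, assuming a single fixed interval for every host (the 1-second default is an arbitrary placeholder, not a value any site publishes). The class name `HostPacer` is hypothetical, and the clock and sleep functions are injectable so the logic can be tested without actually waiting.

```python
import time
from urllib.parse import urlsplit


class HostPacer:
    """Enforce a minimum delay between consecutive requests to the same host.

    Assumption: one fixed interval for all hosts. Real sites use different,
    undocumented, and changing thresholds, which is exactly the problem.
    Not thread-safe; a distributed scraper would need shared state.
    """

    def __init__(self, min_interval: float = 1.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_request: dict[str, float] = {}  # host -> time of last request

    def wait(self, url: str) -> float:
        """Block until a request to url's host is allowed; return seconds slept."""
        host = urlsplit(url).netloc
        now = self._clock()
        last = self._last_request.get(host)
        delay = 0.0
        if last is not None:
            # Sleep only for whatever remains of the interval since the last request.
            delay = max(0.0, self.min_interval - (now - last))
        if delay > 0:
            self._sleep(delay)
        self._last_request[host] = now + delay
        return delay
```

Calling `pacer.wait(url)` before each request keeps a single process polite toward each host, but the interval is still a guess: if the site's real limit is stricter, or changes, the scraper only finds out when 429s start arriving.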

To work around this, teams often try:

- Distributed request scheduling

- Rotating IP addresses

- Custom retry logic and backoff strategies

Each workaround adds complexity and still does not guarantee stability.
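
As an illustration of the "custom retry logic and backoff" item, here is a minimal sketch of retries with exponential backoff and "full jitter" (a random delay up to an exponentially growing cap). It assumes a hypothetical `fetch` callable returning `(status, headers, body)`, honors only the integer-seconds form of the `Retry-After` header (the HTTP-date form is ignored), and treats 429 plus common 5xx codes as retryable; all of these are illustrative choices, not a standard recipe.

```python
import random
import time
from typing import Callable, Mapping, Optional, Tuple

# Hypothetical fetch result shape: (status_code, headers, body).
FetchResult = Tuple[int, Mapping[str, str], bytes]

# Assumed set of statuses worth retrying: rate limiting and transient server errors.
RETRYABLE = {429, 500, 502, 503, 504}


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0,
                  retry_after: Optional[str] = None, rng=random.random) -> float:
    """Seconds to wait before retry number `attempt` (0-based).

    Uses a numeric Retry-After header if present (capped), otherwise a random
    value in [0, min(cap, base * 2**attempt)).
    """
    if retry_after is not None and retry_after.strip().isdigit():
        return min(cap, float(retry_after.strip()))
    return rng() * min(cap, base * (2 ** attempt))


def fetch_with_retries(fetch: Callable[[], FetchResult], max_attempts: int = 5,
                       sleep: Callable[[float], None] = time.sleep) -> FetchResult:
    """Call fetch(), retrying retryable statuses with backoff between attempts.

    Returns the last response, even if it is still an error, so the caller can
    decide what to do. Header lookup assumes the key is spelled "Retry-After".
    """
    for attempt in range(max_attempts):
        status, headers, body = fetch()
        if status not in RETRYABLE or attempt == max_attempts - 1:
            return status, headers, body
        sleep(backoff_delay(attempt, retry_after=headers.get("Retry-After")))
    raise ValueError("max_attempts must be at least 1")
```

Jitter spreads retries from many workers over time instead of having them hit the site in synchronized waves, but none of this tells the scraper what the real limit is; it only reacts after being throttled.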

Proxies: Necessary but Hard to Manage

Once rate limits appear, proxies become unavoidable. Using proxies introduces a new set of problems:

- Finding reliable residential or datacenter proxy providers

- Detecting and replacing burned IPs

- Handling regional and geographic restrictions

- Debugging failures caused by slow or unstable proxy nodes

Proxy costs also scale with usage: as traffic grows, so does proxy spend, often with little visibility into which requests actually succeed.

For most teams, proxy management becomes a full-time operational task rather than a side detail.
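
To make "detecting and replacing burned IPs" and "visibility into which requests succeed" concrete, here is a minimal sketch of a round-robin proxy pool. It assumes a proxy counts as burned after a fixed number of consecutive failures (an arbitrary rule chosen for illustration) and uses placeholder proxy URLs; the class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProxyStats:
    successes: int = 0
    failures: int = 0
    consecutive_failures: int = 0


class ProxyPool:
    """Round-robin proxy rotation that retires proxies after repeated failures.

    Assumption: `max_consecutive_failures` failures in a row means the IP is
    burned. Real detection is fuzzier (partial blocks, regional bans, slowness).
    """

    def __init__(self, proxies: list[str], max_consecutive_failures: int = 3):
        self.max_consecutive_failures = max_consecutive_failures
        self.stats = {p: ProxyStats() for p in proxies}
        self._active = list(proxies)
        self._index = 0

    def next_proxy(self) -> str:
        """Return the next active proxy in rotation."""
        if not self._active:
            raise RuntimeError("all proxies retired; the pool must be replenished")
        proxy = self._active[self._index % len(self._active)]
        self._index += 1
        return proxy

    def report(self, proxy: str, ok: bool) -> None:
        """Record the outcome of a request made through `proxy`."""
        s = self.stats[proxy]
        if ok:
            s.successes += 1
            s.consecutive_failures = 0
            return
        s.failures += 1
        s.consecutive_failures += 1
        if s.consecutive_failures >= self.max_consecutive_failures and proxy in self._active:
            self._active.remove(proxy)  # treat as burned and stop using it

    def success_rate(self) -> float:
        """Fraction of reported requests that succeeded, across all proxies."""
        total = sum(s.successes + s.failures for s in self.stats.values())
        ok = sum(s.successes for s in self.stats.values())
        return ok / total if total else 0.0

    def cost_per_success(self, total_spend: float) -> float:
        """Proxy spend divided by successful requests (inf if nothing succeeded)."""
        ok = sum(s.successes for s in self.stats.values())
        return total_spend / ok if ok else float("inf")
```

The last method is the number that usually matters: if only a third of paid requests succeed, the effective price per useful page is three times the nominal per-request price.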

CAPTCHAs: The Breaking Point

CAPTCHAs are designed to stop automated access entirely. When scraping reaches a certain volume or pattern, CAPTCHA challenges appear:

- Image and checkbox challenges

- JavaScript-based bot detection

- Behavioral analysis tied to browser fingerprints

Solving CAPTCHAs programmatically is expensive and fragile. Third-party CAPTCHA-solving services add latency, cost, and legal uncertainty. Headless browser automation increases infrastructure requirements and still does not guarantee success.

At this stage, DIY scraping often becomes slower and more expensive than the data it produces.
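
Before a scraper can react to any of these defenses, it has to notice them, because challenge pages and throttled responses often arrive with a normal-looking status code. The sketch below labels a response so the caller can choose to back off, rotate proxies, or stop. The marker phrases and the `min_body_bytes` threshold are illustrative assumptions, not a reliable detection method; a legitimate page that mentions "captcha" would be misclassified.

```python
import re

# Illustrative markers only (an assumption, not a reliable or complete list):
# phrases that often appear on CAPTCHA or bot-check interstitial pages.
CHALLENGE_MARKERS = re.compile(
    rb"captcha|verify you are human|unusual traffic|are you a robot",
    re.IGNORECASE,
)


def classify_response(status: int, body: bytes, min_body_bytes: int = 512) -> str:
    """Label a response so the scraper can decide whether to retry, rotate, or stop.

    Returns one of: "rate_limited", "challenge", "blocked", "suspect", "ok", "error".
    `min_body_bytes` is a hypothetical threshold for spotting truncated pages.
    """
    if status == 429:
        return "rate_limited"  # explicit rate limit: back off
    if CHALLENGE_MARKERS.search(body):
        return "challenge"  # CAPTCHA or bot check, even when served with a 200
    if status in (401, 403):
        return "blocked"  # likely IP or fingerprint block: rotate or stop
    if 200 <= status < 300:
        if len(body) < min_body_bytes:
            return "suspect"  # possible silent throttling or incomplete page
        return "ok"
    return "error"
```

Every label other than "ok" maps to another piece of logic the team has to build and tune, which is where the costs described above accumulate.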

Why DIY Scraping Does Not Scale

The core issue is not technical skill. It is economics and reliability.

DIY scraping requires continuous investment in:

- Infrastructure and scaling logic

- Proxy and IP rotation systems

- Anti-bot detection bypasses

- Monitoring, retries, and failure recovery

As usage grows, maintenance effort grows faster than data value. This is especially problematic for AI systems that depend on consistent, real-time inputs.

How Crawleo Solves These Problems

Crawleo is built specifically to remove the operational burden of scraping and crawling.

Key advantages include:

- Built-in handling of rate limiting and request pacing

- Managed proxy infrastructure with geographic targeting

- Automated mitigation of common bot detection mechanisms

- Clean, AI-ready output formats like Markdown and plain text

Instead of managing scraping infrastructure, developers interact with a simple API that delivers usable data.

Designed for AI and Automation Workflows

DIY scraping often returns noisy HTML that requires heavy post-processing. Crawleo focuses on outputs optimized for modern use cases such as:

- Retrieval-Augmented Generation (RAG) pipelines

- AI agents and autonomous research tools

- Automation workflows and no-code integrations

- Knowledge ingestion for LLM-based systems

This reduces token usage, preprocessing logic, and downstream failures.
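
For a sense of the post-processing a DIY pipeline has to own, here is a minimal sketch that turns raw HTML into plain text using only Python's standard `html.parser`. It is an illustration of the problem, not how Crawleo produces its output: it drops `script`, `style`, and `noscript` content and collapses whitespace, but does nothing about navigation menus, cookie banners, or other page chrome, which is where most of the real effort goes.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping script/style/noscript content."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self):
        super().__init__()  # convert_charrefs=True by default, so &amp; becomes &
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text outside skipped elements, with whitespace collapsed.
        if not self._skip_depth and data.strip():
            self.chunks.append(" ".join(data.split()))


def html_to_text(html: str) -> str:
    """Return the visible text of `html`, one text run per line."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)
```

For example, `html_to_text("<h1>Hello</h1><script>var x = 1;</script><p>Some   text</p>")` keeps only the heading and paragraph text. Every site layout that defeats a simple extractor like this adds more custom cleanup code, and whatever noise survives is paid for again in tokens downstream.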

When Using Crawleo Makes Sense

Crawleo is a strong fit when:

- You need real-time web data at scale

- Reliability matters more than the convenience of one-off scripts

- Your team wants predictable costs and behavior

- Data feeds power AI or production systems

For quick experiments, DIY scraping may work. For production systems, it rarely does.

Related Posts

How to Build LLM Training Datasets at Scale with Web Crawling

Training modern large language models requires massive, continuously updated datasets sourced from the open web. This guide explains how AI teams can build scalable, high quality LLM training datasets using web crawling, with practical architecture patterns and best practices.

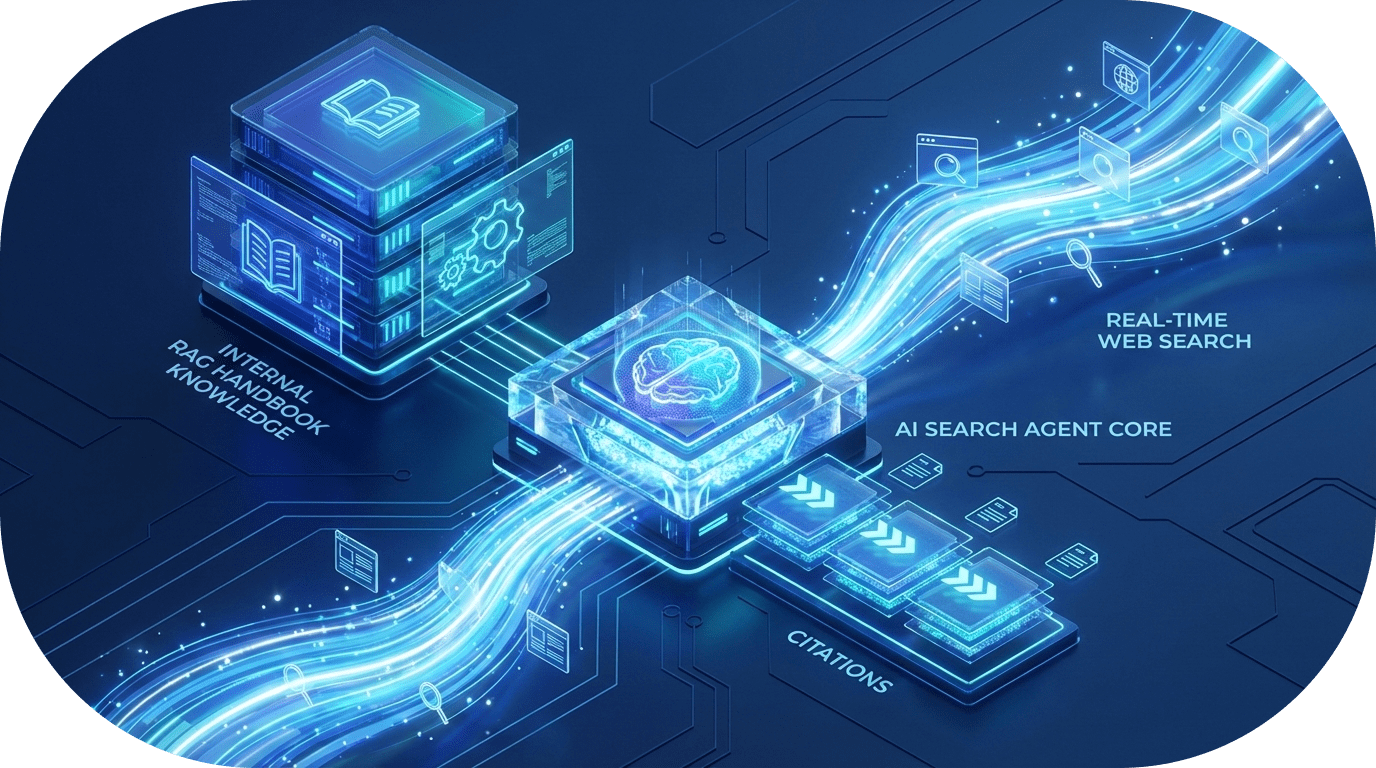

Building Advanced AI Agents: How to Combine RAG with Real-Time Web Search

Learn how to bridge the gap between internal company knowledge and the live web. This guide explores a powerful pattern for building AI agents that can query private handbooks while simultaneously performing filtered real-time web searches to provide the most accurate, cited responses.