Firecrawl vs Exa.ai: Key Differences and Best Use Cases for AI Search & Crawling

What Is Firecrawl?

Firecrawl is a web data API built to transform websites into LLM-ready structured data via a unified API for crawling, scraping, searching, and extracting web content. It’s designed primarily for developers and AI engineers building applications that need direct access to clean online data.

Core Features

- Crawl Entire Websites: Recursively crawl all accessible pages without needing a sitemap.
- Scrape & Extract: Extract web content as clean Markdown, JSON, or other usable formats.
- Handle Dynamic Content: Built-in support for JavaScript rendering and dynamic web pages.
- Search Web Content: Integrated search and map endpoints for finding URLs and content.
- CLI & Skill Tools: Let AI agents retrieve real-time web data through skills and commands.
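To make the scrape, crawl, and map features concrete, here is a minimal sketch of how requests to them could be built. It assumes Firecrawl's v1 REST endpoints (`/v1/scrape`, `/v1/crawl`, `/v1/map`), bearer-token auth, and the `formats`, `limit`, and `scrapeOptions` body fields as described in its public docs; treat all of these as assumptions to verify against the current API reference. The helper names are hypothetical. The builders only create `urllib.request.Request` objects, so nothing is sent until a request is passed to `send`.

```python
import json
import urllib.request

# ASSUMPTION: base URL, paths, and field names follow Firecrawl's public v1 REST
# API docs; confirm them against the current API reference before use.
FIRECRAWL_BASE_URL = "https://api.firecrawl.dev/v1"


def _post(path: str, api_key: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) an authenticated JSON POST request."""
    return urllib.request.Request(
        url=f"{FIRECRAWL_BASE_URL}{path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def build_scrape_request(api_key: str, url: str, formats=("markdown",)) -> urllib.request.Request:
    """Request a single page in the given output formats (Markdown by default)."""
    return _post("/scrape", api_key, {"url": url, "formats": list(formats)})


def build_crawl_request(api_key: str, url: str, limit: int = 50) -> urllib.request.Request:
    """Start a recursive crawl from `url`, capped at `limit` pages."""
    payload = {"url": url, "limit": limit, "scrapeOptions": {"formats": ["markdown"]}}
    return _post("/crawl", api_key, payload)


def build_map_request(api_key: str, url: str) -> urllib.request.Request:
    """List URLs discovered on a site without scraping their content."""
    return _post("/map", api_key, {"url": url})


def send(req: urllib.request.Request) -> dict:
    """Send a prepared request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        return json.load(resp)
```

For example, `send(build_scrape_request(key, "https://example.com"))` should, per the v1 docs, return an object with the page Markdown under `["data"]["markdown"]`, while a crawl call returns a job `id` that you poll for results. Keeping request construction separate from sending makes the payloads easy to inspect and test offline.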

Typical Use Cases

Firecrawl excels when your application needs structured web data, such as:

- Powering AI assistants with real-time context from live web pages (chatbots, context-aware agents).
- Lead enrichment & competitor tracking from directory or business sites.
- E-commerce data extraction for price monitoring and product info.
- Deep research workflows where comprehensive web content matters.

Firecrawl is great for teams building AI apps that need to integrate web content directly into models, indexes, or pipelines and prefer structured API outputs over raw HTML.
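Before scraped pages go into an index or RAG pipeline, they are usually split into smaller pieces. The sketch below assumes a page has already been returned as Markdown (for instance by a Firecrawl scrape); `chunk_markdown` and `Chunk` are hypothetical names, and heading-based splitting with a fixed character cap is one common strategy chosen for illustration, not something Firecrawl does for you.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    source_url: str  # page the text came from, kept for citations
    heading: str     # nearest Markdown heading above the text
    text: str


HEADING = re.compile(r"^#{1,6}\s+(.*)$")


def chunk_markdown(source_url: str, markdown: str, max_chars: int = 1500) -> list[Chunk]:
    """Split a scraped Markdown page into heading-scoped chunks for indexing."""
    chunks: list[Chunk] = []
    heading, buf = "", []

    def flush() -> None:
        text = "\n".join(buf).strip()
        # Long sections are cut into fixed-size windows so no chunk exceeds max_chars;
        # empty sections produce no chunks at all.
        for start in range(0, len(text), max_chars):
            chunks.append(Chunk(source_url, heading, text[start:start + max_chars]))

    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            flush()  # close the previous section before starting a new one
            heading, buf = match.group(1).strip(), []
        else:
            buf.append(line)
    flush()
    return chunks
```

Keeping `source_url` and `heading` on every chunk lets a downstream model cite where its context came from. One limitation: lines starting with `#` inside fenced code blocks would also be treated as headings, so a production chunker would track fences and might cap by tokens rather than characters.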

What Is exa.ai?

Exa (also known as exa.ai) is an AI-optimized search engine and API, purpose-built to return semantically relevant search results and parsed web content in a form language models can use directly. Unlike traditional search APIs that optimize for click metrics, Exa is engineered to supply high-quality, relevant content to LLMs and AI workflows.

Core Capabilities

- Neural Semantic Search: Uses transformer-based models to understand intent and meaning beyond keywords.
- Real-Time Content Index: Delivers up-to-date links and parsed page content optimized for AI.
- Structured Output: Returns content and highlights with URL metadata for downstream processing.
- Rich Filtering: Filters search by domain, category, date, or other criteria.
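As an illustration of semantic search combined with filtering, the sketch below builds a search request with optional domain, category, and date filters. It assumes Exa's public `/search` endpoint, the `x-api-key` header, and the `type`, `numResults`, `contents`, `includeDomains`, `category`, and `startPublishedDate` body fields as described in its docs; verify these names against the current API reference. `build_exa_search` is a hypothetical helper, and it only constructs the request without sending it.

```python
import json
import urllib.request
from datetime import date
from typing import Iterable, Optional

# ASSUMPTION: endpoint, auth header, and body fields follow Exa's public /search
# API docs; check the current reference, since field names may change.
EXA_SEARCH_URL = "https://api.exa.ai/search"


def build_exa_search(
    api_key: str,
    query: str,
    *,
    num_results: int = 5,
    include_domains: Optional[Iterable[str]] = None,
    category: Optional[str] = None,
    published_after: Optional[date] = None,
) -> urllib.request.Request:
    """Build (but do not send) a neural search request with optional filters."""
    payload = {
        "query": query,
        "type": "neural",            # semantic (embedding-based) search
        "numResults": num_results,
        "contents": {"text": True},  # ask for parsed page text with each result
    }
    # Filters are added only when set, so an unfiltered search stays minimal.
    if include_domains:
        payload["includeDomains"] = list(include_domains)
    if category:
        payload["category"] = category
    if published_after:
        payload["startPublishedDate"] = f"{published_after.isoformat()}T00:00:00.000Z"
    return urllib.request.Request(
        EXA_SEARCH_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"x-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )
```

To run a search, pass the request to `urllib.request.urlopen` and read the `results` list from the JSON response; each result is expected to carry fields such as `url`, `title`, and `text`.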

Typical Use Cases

Exa.ai is most effective when your AI product needs relevant web knowledge retrieved through search, such as:

- Retrieval-Augmented Generation (RAG): Feeding LLMs with accurate links and up-to-date context.
- AI Research Tools: Semantic search and data retrieval for deep research assistants.
- Large-Scale Content Retrieval: Bulk web results for dashboards, reports, or datasets.
- Chatbot Grounding: Reducing hallucination by grounding language models in real web data.

Exa’s semantic search capabilities make it a strong fit for LLM pipelines, enterprise AI agents, and large-scale search features, where semantic relevance and result quality matter more than direct crawling.
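For RAG and chatbot grounding, search results are typically formatted into a prompt that asks the model to answer only from the retrieved sources and to cite them. The sketch below assumes each result is a dict with `url`, `title`, and `text` keys, as in an Exa `/search` response when page text is requested; `build_grounded_prompt` and the prompt wording are hypothetical, not an Exa feature.

```python
def build_grounded_prompt(question: str, results: list[dict], max_chars_per_source: int = 800) -> str:
    """Format search results as numbered sources that the model can cite as [n]."""
    sources = []
    for i, r in enumerate(results, start=1):
        # Truncate each source so a few long pages cannot crowd out the others.
        snippet = (r.get("text") or "").strip()[:max_chars_per_source]
        # Fall back to the URL when a result has no title.
        sources.append(f"[{i}] {r.get('title') or r['url']}\nURL: {r['url']}\n{snippet}")
    return (
        "Answer the question using only the sources below. "
        "Cite sources as [n]; say so if the sources do not contain the answer.\n\n"
        + "\n\n".join(sources)
        + f"\n\nQuestion: {question}"
    )
```

Numbering the sources and keeping their URLs in the prompt lets the application map each `[n]` citation in the answer back to a real page, which is what makes the grounding checkable.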

Firecrawl vs exa.ai: Key Differences

| Feature | Firecrawl | exa.ai |
|---|---|---|
| Primary Focus | Web crawling & structured extraction | Semantic AI-optimized search |
| Output | Clean Markdown, JSON, extracted fields | Search results with highlights & parsed content |
| Ideal For | Crawlers feeding LLM contexts | AI search & retrieval in RAG and agent workflows |
| Dynamic Content | Full JS-rendering & scraping | Search index with up-to-date parsed pages |
| Use Cases | E-commerce data, marketing, research | High-quality search, semantic retrieval |

When to Use Firecrawl vs exa.ai

Choose Firecrawl if:

- You need structured, machine-ready data from live websites.
- Your AI app or agent must crawl and extract entire sites reliably.
- You are building features like context enrichment, product scraping, or knowledge graphs.

Choose exa.ai if:

- You need AI-optimized search with high relevance and semantic understanding.
- Your workflow benefits more from search result ranking and content retrieval than from full scraping.
- You are building RAG pipelines, research assistants, or AI agents that need reliable web knowledge with minimal crawling logic.

Related Posts

How to Build LLM Training Datasets at Scale with Web Crawling

Training modern large language models requires massive, continuously updated datasets sourced from the open web. This guide explains how AI teams can build scalable, high quality LLM training datasets using web crawling, with practical architecture patterns and best practices.

Rate Limiting, Proxies, and CAPTCHAs: Why DIY Scraping Does Not Scale

Building your own web scraping stack seems simple at first, until rate limits, proxy bans, and CAPTCHAs bring everything to a halt. This article explains why DIY scraping fails at scale and how Crawleo removes these hidden costs for developers and AI teams.

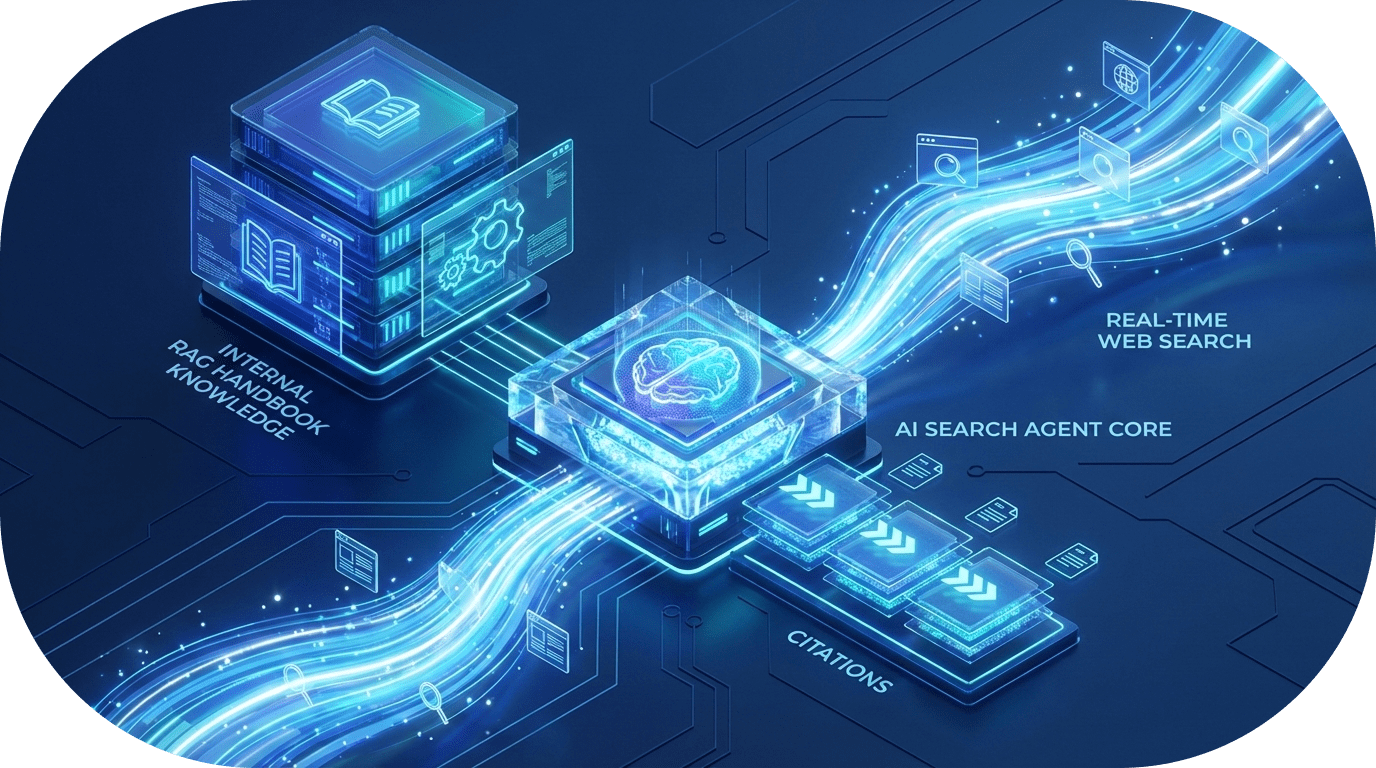

Building Advanced AI Agents: How to Combine RAG with Real-Time Web Search

Learn how to bridge the gap between internal company knowledge and the live web. This guide explores a powerful pattern for building AI agents that can query private handbooks while simultaneously performing filtered real-time web searches to provide the most accurate, cited responses.