Crawleo vs. OpenAI Embeddings: Do You Need Both for RAG?


In the fast-paced world of AI development, it’s easy to get confused by the sheer number of APIs available. Two names often come up when building RAG (Retrieval-Augmented Generation) pipelines: Crawleo and OpenAI Embeddings.

While they might seem like alternative solutions for "handling data," they actually serve different and complementary purposes:

- Crawleo is a Data Acquisition tool (it fetches the data).
- OpenAI Embeddings is a Data Representation tool (it translates data into math).

This guide breaks down their specific differences and shows how to use them together to build powerful AI applications.

1. Core Function & Purpose

Think of building a library. Crawleo is the person who goes out to buy the books (finding and fetching information). OpenAI Embeddings is the librarian who catalogs them by topic so they can be found later (organizing and understanding information).

| Feature | Crawleo.dev | OpenAI Embeddings API |
|---|---|---|
| Primary Role | Finder & Fetcher | Translator (Text-to-Vector) |
| Action | Searches the web and crawls URLs to extract text/HTML. | Converts input text into a vector (list of numbers) for machine understanding. |
| Output | Human-readable content (Markdown, JSON). | Machine-readable arrays (e.g., [0.0023, -0.015, ...]). |
| Typical Use | Getting fresh, live info for RAG pipelines. | Semantic search, clustering, and measuring text similarity. |
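To make the "measuring text similarity" row concrete, here is a minimal sketch of cosine similarity, a comparison commonly applied to embedding vectors. The three-dimensional vectors are hand-made toy values, not real embeddings; real text-embedding-3-small vectors have 1,536 dimensions by default.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


# Toy, hand-made vectors (NOT real embeddings) standing in for three texts.
olympics_news = [0.9, 0.1, 0.0]
olympics_schedule = [0.8, 0.2, 0.1]
cooking_recipe = [0.0, 0.1, 0.9]

print(cosine_similarity(olympics_news, olympics_schedule))  # close to 1: similar topics
print(cosine_similarity(olympics_news, cooking_recipe))     # close to 0: unrelated topics
```

Texts about the same topic end up with vectors pointing in similar directions, which is what semantic search and clustering exploit.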

2. Role in an AI (RAG) Pipeline

In a modern RAG system, these two services sit at opposite ends of the "Data Ingestion" phase. You generally need both to build a chatbot that knows about current events.

Step 1: Retrieval (The "Crawleo" Step)

You cannot embed what you don't have. If you want your AI to answer questions about "The 2026 Winter Olympics," you first need to get that information.

- Query: you send "2026 Winter Olympics news" to Crawleo.
- Crawleo's job: it browses the live web, bypasses CAPTCHAs, renders JavaScript, and returns the clean text content of relevant articles.
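From your code's point of view, the retrieval step is an ordinary HTTP call. The sketch below shows only its general shape: the endpoint URL, the `q` and `max_results` parameters, and the bearer-token header are hypothetical placeholders, not Crawleo's documented API, so check Crawleo's API reference for the real names before using it.

```python
import json
import urllib.parse
import urllib.request

# HYPOTHETICAL placeholder endpoint and parameter names; substitute the
# values from Crawleo's API documentation.
SEARCH_ENDPOINT = "https://api.example.com/search"


def build_search_request(query: str, api_key: str, max_results: int = 5) -> urllib.request.Request:
    """Build (but do not send) a GET request for a web search query."""
    params = urllib.parse.urlencode({"q": query, "max_results": max_results})
    return urllib.request.Request(
        f"{SEARCH_ENDPOINT}?{params}",
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
            "Accept": "application/json",
        },
    )


def search_web(query: str, api_key: str) -> dict:
    """Send the request and decode the JSON response body."""
    request = build_search_request(query, api_key)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

Keeping request construction separate from sending makes the query encoding easy to inspect without touching the network; a real call would look like `search_web("2026 Winter Olympics news", api_key="YOUR_KEY")`.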

Step 2: Embedding (The "OpenAI" Step)

Now that you have the text, you need to store it in a way that allows your AI to search it efficiently.

- Input: you send the article text returned by Crawleo to OpenAI Embeddings.
- OpenAI's job: it converts that text into a vector (a long list of numbers representing the meaning of the text).
- Storage: you save this vector in a vector database such as Pinecone or Milvus.
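A minimal sketch of Step 2, assuming the official `openai` Python package, whose `client.embeddings.create(model=..., input=...)` call accepts a list of strings and returns one embedding per input. The helper name `embed_and_store` and its record schema are hypothetical, and the plain Python list used as `store` stands in for a vector database such as Pinecone or Milvus.

```python
def embed_and_store(client, chunks: list[str], source_url: str, store: list[dict],
                    model: str = "text-embedding-3-small") -> int:
    """Embed text chunks in one batched call and append vector records to `store`.

    `client` is expected to behave like `openai.OpenAI()`. The `store` list is
    an in-memory stand-in for a real vector database (hypothetical schema).
    Returns the number of records added.
    """
    if not chunks:
        return 0
    response = client.embeddings.create(model=model, input=chunks)
    # Each result carries the position of its input; sort so text and vector stay aligned.
    for item in sorted(response.data, key=lambda d: d.index):
        store.append({
            "vector": item.embedding,
            "text": chunks[item.index],
            "source": source_url,
        })
    return len(response.data)
```

Storing the original text and source URL next to each vector lets the application show the retrieved passage and cite where it came from. With a real client this would be called as `embed_and_store(OpenAI(), chunks, url, store)`, where `OpenAI()` reads the `OPENAI_API_KEY` environment variable.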

3. Data Privacy & Freshness

Crawleo: The "Now"

Crawleo emphasizes real-time access: it fetches data live from the open web instead of relying on a stale database.

- Privacy: Crawleo operates with a "zero data retention" policy. It fetches the web page you asked for, hands it to you, and forgets the interaction immediately.

OpenAI Embeddings: The "Knowledge"

OpenAI Embeddings processes text you already have. It applies the model's fixed, pretrained weights to map that text to a vector that captures its meaning, so semantically related passages end up close together.

- Limitation: The embedding model itself doesn't "know" about breaking news. It only knows how to measure the text you feed it. If you feed it old data, you get old embeddings.
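Because an embedding only reflects the text it was given, keeping answers current means re-fetching and re-embedding content as it ages. One simple policy, sketched here with a hypothetical `fetched_at` timestamp stored alongside each record and an arbitrary 24-hour cutoff, is to flag records older than a maximum age for a fresh crawl.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def stale_records(records: list[dict], max_age: timedelta,
                  now: Optional[datetime] = None) -> list[dict]:
    """Return records whose `fetched_at` timestamp is older than `max_age`.

    Each record is assumed (hypothetical schema) to carry a timezone-aware
    `fetched_at` datetime recorded when the source page was crawled.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return [r for r in records if now - r["fetched_at"] > max_age]


now = datetime(2026, 2, 10, 12, 0, tzinfo=timezone.utc)
records = [
    {"source": "https://example.com/fresh", "fetched_at": now - timedelta(hours=2)},
    {"source": "https://example.com/old", "fetched_at": now - timedelta(days=3)},
]
# Only the three-day-old page exceeds the 24-hour cutoff and needs re-crawling.
print([r["source"] for r in stale_records(records, timedelta(hours=24), now)])
# prints ['https://example.com/old']
```

The right cutoff depends on how quickly the underlying topic changes; breaking news needs a much shorter window than reference documentation.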

4. Integration & Inputs

- Crawleo Input: URLs (for deep crawling) or search queries (for finding pages). It handles the messy work of the internet: proxies, browser fingerprinting, and dynamic content rendering.
- OpenAI Input: raw text strings. Each input must fit within a strict token limit (e.g., 8,191 tokens for text-embedding-3-small), so the text has to be cleaned and chunked before sending. You can't just throw a whole raw HTML page at it; you need Crawleo to clean it first.
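Chunking can be as simple as a sliding window with some overlap, so that text cut at a chunk boundary still appears with surrounding context in the neighboring chunk. This sketch counts whitespace-separated words as a rough stand-in for tokens, which is an approximation; exact counts for text-embedding-3-small would come from a tokenizer such as `tiktoken` with the `cl100k_base` encoding. The default sizes are arbitrary examples.

```python
def chunk_words(text: str, max_words: int = 300, overlap: int = 50) -> list[str]:
    """Split text into overlapping windows of at most `max_words` words.

    Word counts only approximate token counts; keep `max_words` well below
    the embedding model's token limit to leave headroom.
    """
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("require max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reached the end of the text
    return chunks


sample = " ".join(str(i) for i in range(10))
print(chunk_words(sample, max_words=4, overlap=1))
# prints ['0 1 2 3', '3 4 5 6', '6 7 8 9']
```

Each chunk is then embedded separately, which is why the Step 2 sketch takes a list of chunks rather than one long string.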

Summary

Do not choose between them; choose how to combine them.

- Use Crawleo when you need to find information outside your database (the live internet).
- Use OpenAI Embeddings when you need to organize or compare that information mathematically for your AI to retrieve later.
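"Retrieve later" typically means embedding the user's question with the same model and ranking stored chunks by similarity. OpenAI's documentation notes that its embeddings are normalized to length 1, so a plain dot product gives the same ranking as cosine similarity. This sketch assumes records shaped like `{"vector": ..., "text": ...}` (a hypothetical schema) and uses hand-made unit vectors instead of real embeddings.

```python
import heapq


def top_k(query_vector: list[float], records: list[dict], k: int = 3) -> list[dict]:
    """Return the k records whose vectors have the largest dot product with the query.

    Assumes all vectors are unit-length (as OpenAI embeddings are), so the
    dot product equals cosine similarity.
    """
    def score(record: dict) -> float:
        return sum(q * v for q, v in zip(query_vector, record["vector"]))

    return heapq.nlargest(k, records, key=score)


records = [
    {"text": "Olympics schedule", "vector": [0.6, 0.8]},
    {"text": "Pasta recipe", "vector": [-0.8, 0.6]},
    {"text": "Medal table", "vector": [1.0, 0.0]},
]
query = [0.8, 0.6]  # toy unit vector standing in for an embedded question
print([r["text"] for r in top_k(query, records, k=2)])
# prints ['Olympics schedule', 'Medal table']
```

A vector database performs the same ranking with an index so it scales past a linear scan; the retrieved texts are then passed to the language model as context.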

Building a RAG pipeline? Start by getting the data you need with Crawleo.dev.

Tags

Related Posts

How to Build LLM Training Datasets at Scale with Web Crawling

Training modern large language models requires massive, continuously updated datasets sourced from the open web. This guide explains how AI teams can build scalable, high quality LLM training datasets using web crawling, with practical architecture patterns and best practices.

Rate Limiting, Proxies, and CAPTCHAs: Why DIY Scraping Does Not Scale

Building your own web scraping stack seems simple at first, until rate limits, proxy bans, and CAPTCHAs bring everything to a halt. This article explains why DIY scraping fails at scale and how Crawleo removes these hidden costs for developers and AI teams.

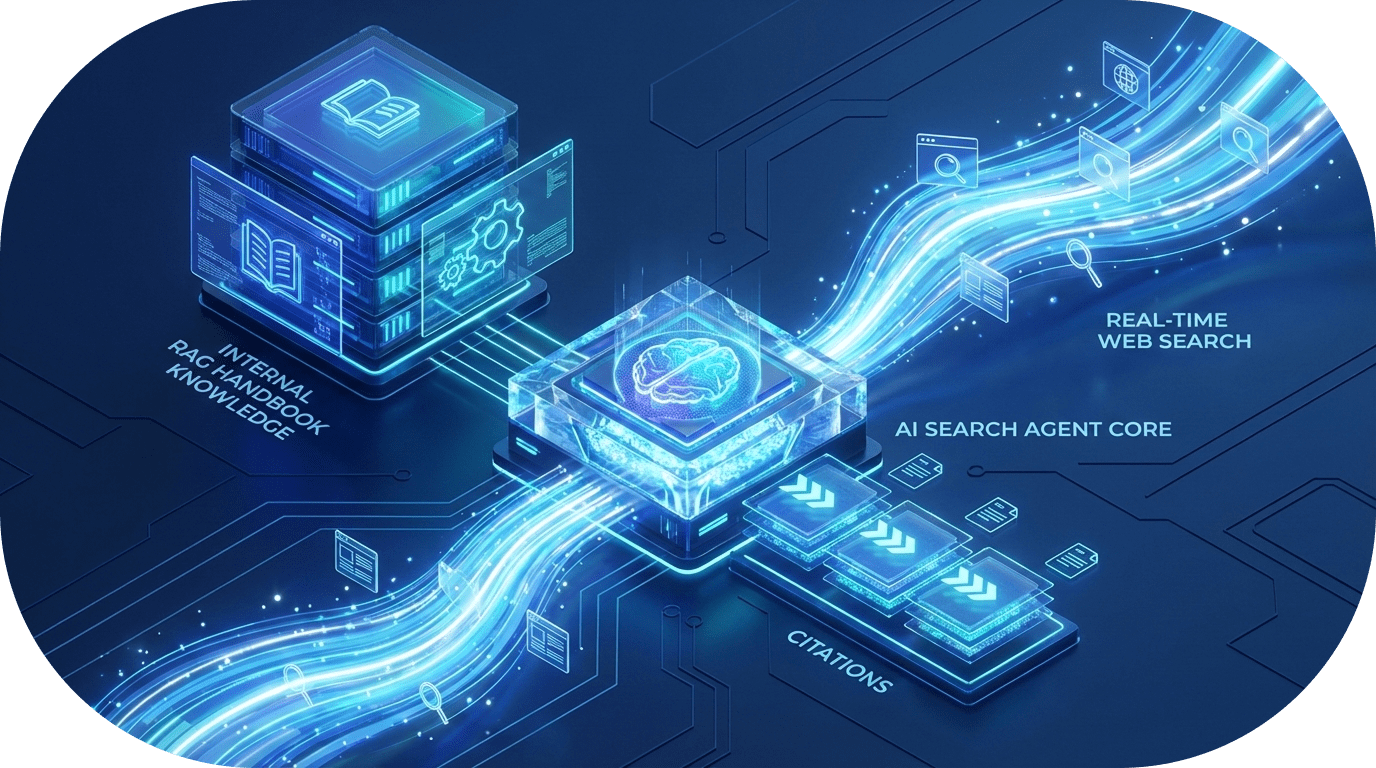

Building Advanced AI Agents: How to Combine RAG with Real-Time Web Search

Learn how to bridge the gap between internal company knowledge and the live web. This guide explores a powerful pattern for building AI agents that can query private handbooks while simultaneously performing filtered real-time web searches to provide the most accurate, cited responses.