Firecrawl vs Crawleo: Web Crawling, Scraping, and AI Data Tools Compared

Introduction

When building AI applications, agents, or large-scale data workflows, you often need fresh web data. Two tools commonly discussed by developers are Firecrawl and Crawleo. Although they share some capabilities, they serve different primary purposes and workflows. This guide explains their differences, strengths, and ideal use cases.

What Is Firecrawl?

Firecrawl is a web crawling and scraping API designed to turn websites into structured, AI-ready data. It handles dynamic sites, JavaScript content, and complex link structures without requiring sitemaps.

Key Capabilities:

- Crawling: Traverses all accessible pages and subpages to collect content.

- Scraping: Extracts and formats content into clean Markdown, HTML, or JSON.

- Dynamic Support: Handles JavaScript-rendered content and anti-bot mechanisms.

- Developer Tooling: SDKs for Python and Node.js, plus a CLI.
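To make the crawling capability concrete, here is a minimal sketch of the general idea behind sitemap-free traversal: a breadth-first walk that starts at one URL, follows links, and stays on the same host. This is not Firecrawl's implementation or API. `crawl_site`, `fetch_links`, and the in-memory `SITE` map are hypothetical names used only for illustration, and a real crawler would fetch pages over HTTP and render JavaScript before extracting links.

```python
from collections import deque
from typing import Callable, Iterable
from urllib.parse import urldefrag, urljoin, urlparse


def crawl_site(start_url: str,
               fetch_links: Callable[[str], Iterable[str]],
               max_pages: int = 100) -> list[str]:
    """Breadth-first traversal of same-host pages reachable from start_url."""
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    visited: list[str] = []

    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        for href in fetch_links(url):
            # Resolve relative links and drop #fragments so duplicates collapse.
            link, _fragment = urldefrag(urljoin(url, href))
            if urlparse(link).netloc == host and link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# Hypothetical in-memory "website" standing in for real HTTP fetches.
SITE = {
    "https://example.com/": ["/docs", "/blog", "https://other.example.org/"],
    "https://example.com/docs": ["/docs/api", "/"],
    "https://example.com/blog": ["/docs#intro"],
    "https://example.com/docs/api": [],
}

pages = crawl_site("https://example.com/", lambda url: SITE.get(url, []))
```

The `max_pages` cap and the same-host check are the two guards that keep a traversal like this bounded. A hosted crawling API typically exposes similar limits as request options.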

When to Use Firecrawl:

- You need to crawl full site structures and gather large content sets.

- You want well-formatted Markdown or structured data for RAG applications.

- Your project involves extensive research, content aggregation, or training data pipelines.

Firecrawl’s design makes it particularly well suited to large crawl jobs and content mining, where comprehensive site traversal and cleanly extracted output both matter.

What Is Crawleo?

Crawleo is a privacy-focused, real-time web search and crawling API built for AI assistants, agents, and LLM pipelines. It emphasizes live data access rather than batch scraping.

Core Features:

- Search API: Query the live web and get current results back.

- Crawler API: Crawl specific URLs with JS rendering support.

- MCP Integration: Native Model Context Protocol server support for AI assistants.

- LLM-Ready Outputs: Formats optimized for minimal token usage.

- Privacy-First: Zero data retention and strict privacy guarantees.
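As a rough illustration of what "LLM-ready" output means in practice, the sketch below strips a page down to its visible text using only the Python standard library. It is not Crawleo's actual output pipeline. `TextExtractor`, `html_to_text`, and the choice of tags to skip are assumptions made for this example, and character count is used only as a crude stand-in for token count.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text, skipping script, style, nav, and footer content."""

    SKIP_TAGS = {"script", "style", "nav", "footer"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self._chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text outside skipped elements, with whitespace collapsed.
        if self._skip_depth == 0 and data.strip():
            self._chunks.append(" ".join(data.split()))

    def text(self) -> str:
        return "\n".join(self._chunks)


def html_to_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


RAW_HTML = """
<html><head><style>body { margin: 0 }</style></head>
<body>
  <nav><a href="/">Home</a> <a href="/pricing">Pricing</a></nav>
  <h1>Release notes</h1>
  <p>Version 2.0   adds   streaming responses.</p>
  <script>trackPageView();</script>
  <footer>Copyright Example Inc.</footer>
</body></html>
"""

compact = html_to_text(RAW_HTML)
print(len(RAW_HTML), "->", len(compact), "characters")
```

Dropping markup, navigation, and scripts before the text reaches the model is where most of the savings come from. A production service would likely also preserve headings and links in a compact form such as Markdown.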

When to Use Crawleo:

- You need real-time search results integrated into AI agents.

- You want a lightweight crawler that can be called on demand from AI chat interfaces.

- Privacy and a no-data-storage policy are critical.

- You’re building RAG apps or assistant tools that require live web context.

Crawleo excels at on-demand retrieval and search-oriented workflows, especially where freshness and privacy are priorities.

Key Differences

| Feature Category | Firecrawl | Crawleo |
|---|---|---|
| Primary Focus | Deep crawling and structured web data extraction | Real-time web search and on-demand crawling |
| Output Formats | Markdown, JSON, HTML | Markdown, plain text, HTML |
| Best For | Large crawl jobs, content mining workflows | AI assistants, live search, RAG pipelines |
| Integration | APIs + SDKs | REST API + MCP server support |
| Privacy | Standard API privacy | Zero data retention focus |
| JavaScript Support | Yes | Yes |
| Use Case Examples | Market research data, website analysis | Ask AI for current web facts, competitor search |

Use Case Scenarios

Ideal Firecrawl Use Cases

- Market & Competitor Analysis: Crawl competitor sites to gather pricing, product, and content data.

- Data Aggregation: Collect content from thousands of pages into structured feeds.

- Training Data Pipelines: Generate Markdown datasets for LLM training or indexing.

Ideal Crawleo Use Cases

- AI Assistant Web Search: Provide up-to-date answers inside chat tools.

- Live Research Tools: Integrate with RAG for news, market updates, or product discovery.

- Agent Workflows: Use MCP tools inside AI assistant environments like Claude or Cursor.
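The on-demand pattern behind these use cases can be sketched as a small helper: search for the user's question, crawl the top results, and assemble a cited context block that fits a size budget. Everything here is hypothetical. `build_web_context` and the stub `fake_search` and `fake_crawl` callables are illustration-only names, not part of Crawleo's API; in a real agent the two callables would wrap whatever search and crawler endpoints or MCP tools you use.

```python
from typing import Callable


def build_web_context(question: str,
                      search: Callable[[str], list[str]],
                      crawl: Callable[[str], str],
                      max_sources: int = 3,
                      max_chars: int = 2000) -> str:
    """Search for the question, crawl the top hits, and return a cited context block."""
    sections: list[str] = []
    used = 0
    for url in search(question)[:max_sources]:
        body = crawl(url).strip()
        if not body:
            continue  # skip pages that yielded no text
        remaining = max_chars - used
        if remaining <= 0:
            break  # budget for page text is exhausted
        snippet = body[:remaining]
        sections.append(f"Source: {url}\n{snippet}")
        used += len(snippet)
    return "\n\n".join(sections)


# Stub callables standing in for real search and crawler API calls.
def fake_search(query: str) -> list[str]:
    return ["https://example.com/a", "https://example.com/b", "https://example.com/c"]


def fake_crawl(url: str) -> str:
    pages = {
        "https://example.com/a": "Alpha page text.",
        "https://example.com/b": "",
        "https://example.com/c": "Gamma page text.",
    }
    return pages[url]


context = build_web_context("what changed this week?", fake_search, fake_crawl)
```

Passing the search and crawl functions in as parameters keeps the agent logic independent of any one provider, so the same helper can be tested with stubs and then pointed at a live API.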

Choosing Between Them

Choose Firecrawl if your goal is deep, comprehensive crawling and scraping with flexible output formats and large-scale data collection. Choose Crawleo if you want real-time search integration, low-latency responses, and privacy-focused web access for agents and AI apps.

Both tools have a place in modern AI stacks; your project’s needs determine which one is the right fit.

Conclusion

Firecrawl and Crawleo are powerful but serve distinct purposes. Understanding their differences helps you align your tool choice with your project goals, whether that’s deep crawling and structured data or live search and AI-ready results.


Tags

Related Posts

How to Build LLM Training Datasets at Scale with Web Crawling

Training modern large language models requires massive, continuously updated datasets sourced from the open web. This guide explains how AI teams can build scalable, high quality LLM training datasets using web crawling, with practical architecture patterns and best practices.

Rate Limiting, Proxies, and CAPTCHAs: Why DIY Scraping Does Not Scale

Building your own web scraping stack seems simple at first, until rate limits, proxy bans, and CAPTCHAs bring everything to a halt. This article explains why DIY scraping fails at scale and how Crawleo removes these hidden costs for developers and AI teams.

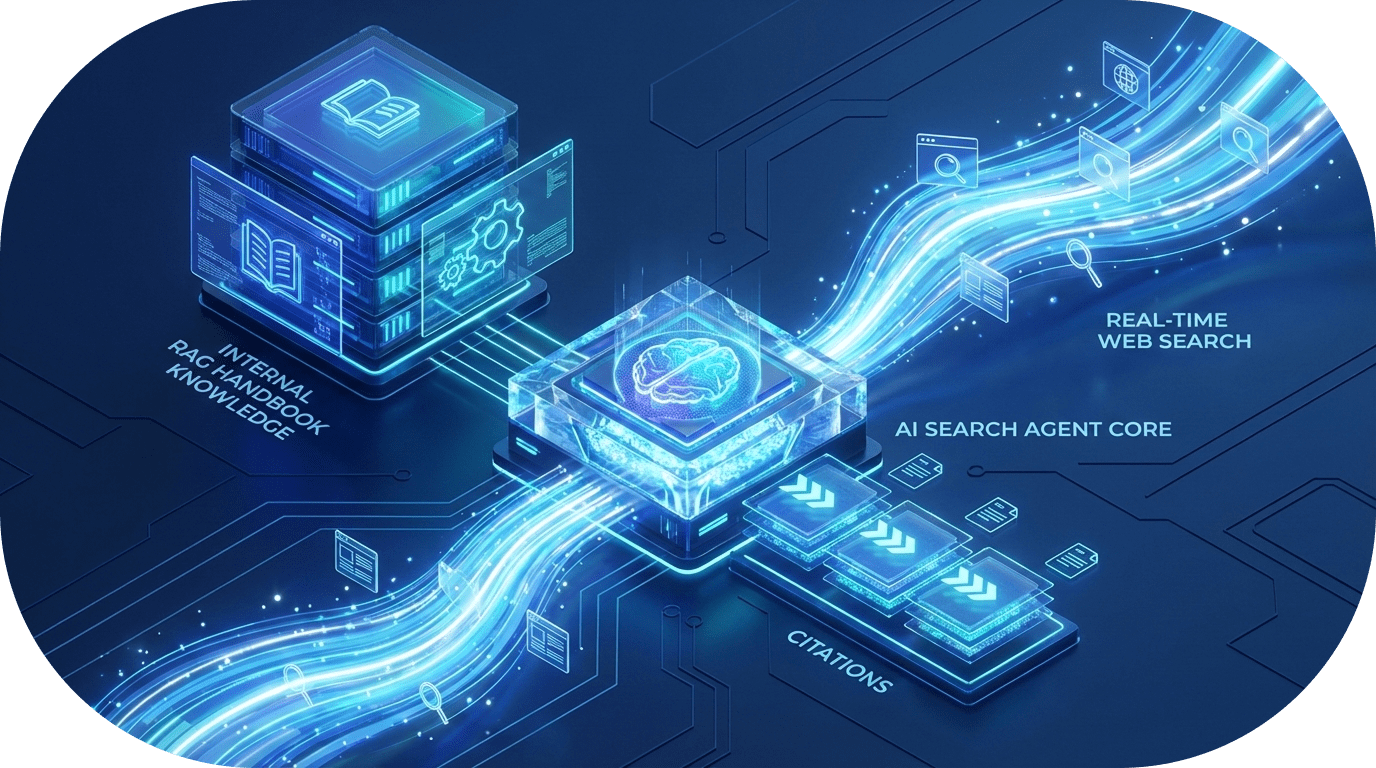

Building Advanced AI Agents: How to Combine RAG with Real-Time Web Search

Learn how to bridge the gap between internal company knowledge and the live web. This guide explores a powerful pattern for building AI agents that can query private handbooks while simultaneously performing filtered real-time web searches to provide the most accurate, cited responses.