How to Add a Custom MCP Server to IntelliJ AI Assistant for Live Web Search

Step 1: Open MCP Settings

Navigate to your assistant settings using the following path:

Settings > Tools > AI Assistant > Model Context Protocol (MCP)

This section manages all MCP servers connected to your assistant.

Step 2: Add a New MCP Server

In the MCP window:

- Click the Add icon in the top-left corner.
- Paste the following configuration, replacing YourAPIKey with your actual API key.

{
  "mcpServers": {
    "crawleo": {
      "url": "https://api.crawleo.dev/mcp",
      "transport": "http",
      "headers": {
        "Authorization": "Bearer YourAPIKey"
      }
    }
  }
}

- Click OK to save the configuration.
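If you would rather not paste the key by hand, the configuration above can be generated from an environment variable. The following is a minimal sketch, not an official Crawleo or JetBrains tool: the variable name `CRAWLEO_API_KEY` and the helper `build_mcp_config` are assumptions made for illustration, while the URL, transport, and header layout are copied from the configuration in this step.

```python
import json
import os

PLACEHOLDER = "YourAPIKey"


def build_mcp_config(api_key: str) -> dict:
    """Return the MCP configuration from this step with the key filled in."""
    if not api_key or api_key == PLACEHOLDER:
        raise ValueError("Provide a real API key, not the placeholder")
    return {
        "mcpServers": {
            "crawleo": {
                "url": "https://api.crawleo.dev/mcp",
                "transport": "http",
                "headers": {"Authorization": f"Bearer {api_key}"},
            }
        }
    }


if __name__ == "__main__":
    # CRAWLEO_API_KEY is a hypothetical variable name chosen for this sketch.
    key = os.environ.get("CRAWLEO_API_KEY", "")
    if key:
        print(json.dumps(build_mcp_config(key), indent=2))
    else:
        print("CRAWLEO_API_KEY is not set")
```

Running the script prints JSON that can be pasted into the MCP dialog, which keeps the key out of any file you might commit.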

Step 3: Apply and Start the MCP Server

After saving, you will see the server listed with a Not Started state.

To activate it:

- Click Apply in the settings window.
- Wait for the assistant to reload the configuration.

Once reloaded, the server state should change to Started, confirming that the MCP server is active and ready to use.

Step 4: Use MCP Tools in Chat

With the MCP server running, you can now call its tools directly from the chat interface.

Available commands include:

- /crawl_web for crawling specific URLs
- /search_web for live web search queries

These commands allow your AI assistant to retrieve real-time web content instead of relying on static or cached knowledge.
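For context, when a tool is invoked the MCP client sends the server a JSON-RPC 2.0 `tools/call` request on your behalf. The sketch below only builds such a message to show its shape. The tool names come from the list above, but the argument names `query` and `url` are assumptions; the real input schema is whatever the server reports for each tool.

```python
import json
from itertools import count

_request_ids = count(1)  # JSON-RPC requests need a unique id per request


def tool_call_request(tool: str, arguments: dict) -> dict:
    """Build an MCP 'tools/call' JSON-RPC request for the given tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    }


# Argument names below are hypothetical; check the tool's declared schema.
search = tool_call_request("search_web", {"query": "latest Kotlin release"})
crawl = tool_call_request("crawl_web", {"url": "https://example.com"})
print(json.dumps(search, indent=2))
```

You never need to write this message yourself inside the IDE; it is shown only to make clear what the chat command triggers.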

Common Troubleshooting Tips

- If the server remains in Not Started, double-check your API key and click Apply again.
- Ensure your network allows outbound HTTPS connections.
- Restart your AI assistant or IDE if the state does not update.
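Before retrying, it can help to rule out a malformed configuration. The following sketch checks pasted JSON for the most likely mistakes: invalid syntax, a missing server entry, a non-HTTPS URL, or a key that is still the placeholder. The function name `check_mcp_config` is hypothetical, and the checks reflect only the configuration shown in this guide, not any validation the IDE itself performs.

```python
import json
from urllib.parse import urlparse


def check_mcp_config(text: str, server: str = "crawleo") -> list[str]:
    """Return a list of problems found in the pasted MCP configuration."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"Invalid JSON: {exc}"]
    if not isinstance(config, dict):
        return ["Top level of the configuration should be a JSON object"]

    entry = config.get("mcpServers", {}).get(server)
    if not isinstance(entry, dict):
        return [f"No '{server}' entry under 'mcpServers'"]

    problems = []
    url = urlparse(entry.get("url", ""))
    if url.scheme != "https" or not url.netloc:
        problems.append("'url' should be a full https:// address")

    auth = entry.get("headers", {}).get("Authorization", "")
    if not auth.startswith("Bearer "):
        problems.append("'Authorization' should start with 'Bearer '")
    elif auth[len("Bearer "):].strip() in ("", "YourAPIKey"):
        problems.append("API key is missing or still the placeholder")
    return problems
```

An empty list means none of these particular mistakes were found; it does not confirm that the key itself is valid.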

Why Use MCP for Web Search

Using MCP servers provides several benefits:

- Standardized tool integration across AI platforms
- Secure API-based access with scoped permissions
- Live, up-to-date web data for AI workflows
- No custom plugin or extension code required

This makes MCP ideal for developers building AI agents, RAG pipelines, or automation workflows that depend on fresh web information.

Final Notes

Once configured, MCP servers run in the background and extend your AI assistant with external capabilities. You can add multiple MCP servers to combine different tools and services in a single chat interface.

For advanced use cases, consider chaining MCP tools inside automated agent workflows or IDE-based assistants.

Related Posts

How to Build LLM Training Datasets at Scale with Web Crawling

Training modern large language models requires massive, continuously updated datasets sourced from the open web. This guide explains how AI teams can build scalable, high quality LLM training datasets using web crawling, with practical architecture patterns and best practices.

Rate Limiting, Proxies, and CAPTCHAs: Why DIY Scraping Does Not Scale

Building your own web scraping stack seems simple at first, until rate limits, proxy bans, and CAPTCHAs bring everything to a halt. This article explains why DIY scraping fails at scale and how Crawleo removes these hidden costs for developers and AI teams.

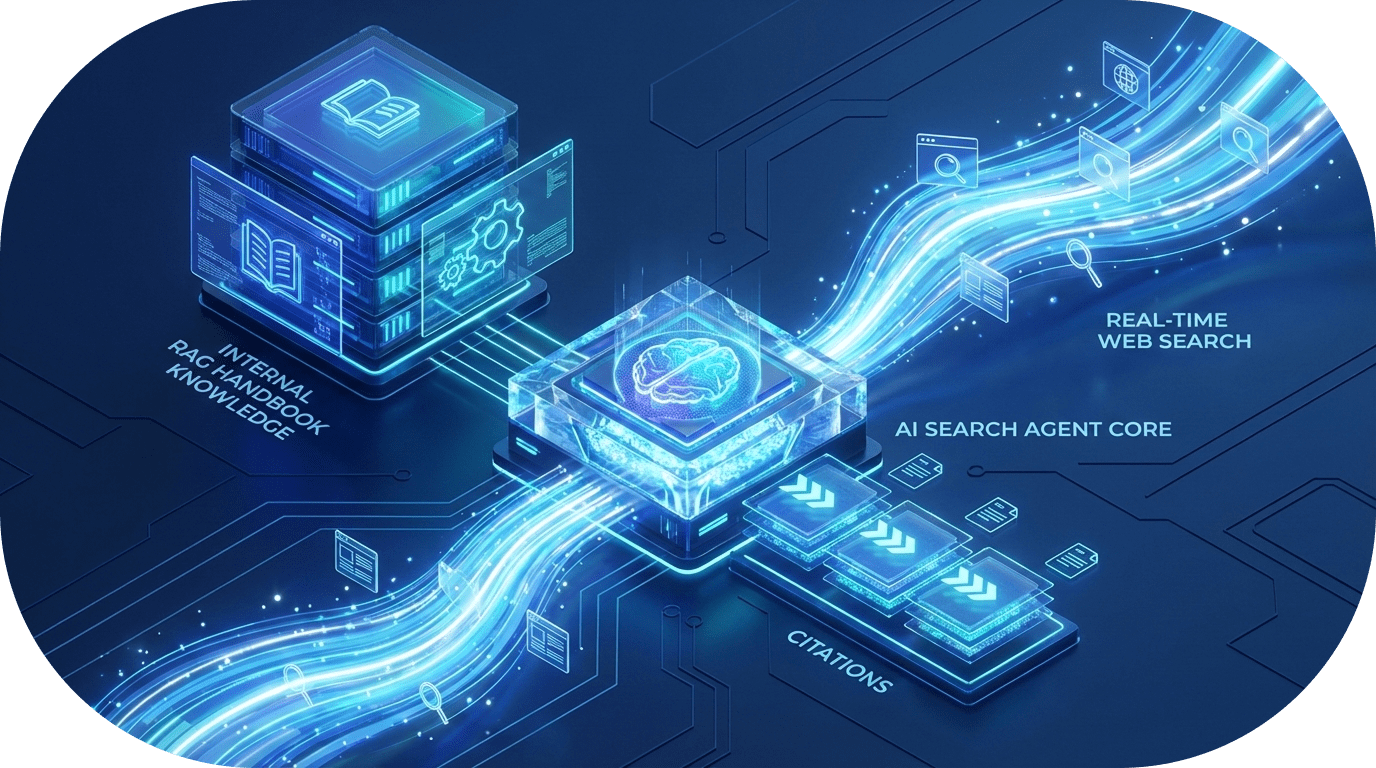

Building Advanced AI Agents: How to Combine RAG with Real-Time Web Search

Learn how to bridge the gap between internal company knowledge and the live web. This guide explores a powerful pattern for building AI agents that can query private handbooks while simultaneously performing filtered real-time web searches to provide the most accurate, cited responses.