Make Antigravity Crawl the Web in Real Time Without Getting Blocked, for Free

1. Open MCP Server Settings

From the Agent Panel in Antigravity IDE:

- Click Additional options (the three dots in the top‑right corner)

- Click MCP Servers

- Click Manage MCP Servers in the top-right corner

A new tab called Manage MCPs will appear in the editor.

2. Open Raw MCP Configuration

Inside the Manage MCPs tab:

- Click View raw config

This opens the raw MCP JSON configuration file.

3. Add Crawleo MCP Configuration

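Paste the following JSON into the configuration file. If the file already defines other servers, add only the "crawleo" entry inside the existing "mcpServers" object so the file stays valid JSON: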

{
  "mcpServers": {
    "crawleo": {
      "serverUrl": "https://api.crawleo.dev/mcp",
      "transport": "http",
      "headers": {
        "Authorization": "Bearer API_KEY"
      }
    }
  }
}

Replace API_KEY with your actual Crawleo API key.

Example:

"Authorization": "Bearer ck_live_xxxxxxxxxxxxxxxxx"

Save the file after editing.
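If you prefer not to hand-edit the file, or it already lists other servers, the merge can be scripted. This is a minimal Python sketch, not an Antigravity tool: it assumes the config is a plain JSON file whose path you pass in (its on-disk location is not stated in this guide), treats a missing or empty file as an empty config, and rewrites the file with two-space indentation.

```python
import json
from pathlib import Path


def add_crawleo_server(config_path: str, api_key: str) -> dict:
    """Add or replace the "crawleo" entry in an MCP config file, keeping other servers."""
    path = Path(config_path)
    text = path.read_text(encoding="utf-8") if path.exists() else ""
    # A missing or empty file is treated as an empty config object.
    config = json.loads(text) if text.strip() else {}
    servers = config.setdefault("mcpServers", {})
    servers["crawleo"] = {
        "serverUrl": "https://api.crawleo.dev/mcp",
        "transport": "http",
        # strip() drops stray spaces or newlines copied along with the key.
        "headers": {"Authorization": f"Bearer {api_key.strip()}"},
    }
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

For example, `add_crawleo_server("/path/to/mcp_config.json", os.environ["CRAWLEO_API_KEY"])`, where both the path and the environment variable name are placeholders. Reading the key from the environment keeps it out of scripts and shell history.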

4. Refresh MCP Servers

- Go back to the Manage MCPs tab

- Click Refresh

If the configuration is valid, Antigravity IDE will register the Crawleo MCP server.
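If the server does not appear, it can help to check the raw config before refreshing again. The sketch below only mirrors the JSON shown in step 3. It is not Antigravity's own validation logic, which this guide does not describe, and `check_crawleo_config` is a hypothetical helper name.

```python
import json


def check_crawleo_config(raw: str) -> list:
    """Return a list of problems in a raw MCP config string (empty if none found)."""
    try:
        config = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg} at line {exc.lineno}"]
    if not isinstance(config, dict):
        return ["top level must be a JSON object"]
    servers = config.get("mcpServers")
    if not isinstance(servers, dict):
        return ['missing "mcpServers" object']
    entry = servers.get("crawleo")
    if not isinstance(entry, dict):
        return ['missing "crawleo" entry under "mcpServers"']

    problems = []
    if entry.get("serverUrl") != "https://api.crawleo.dev/mcp":
        problems.append('"serverUrl" should be https://api.crawleo.dev/mcp')
    headers = entry.get("headers")
    auth = headers.get("Authorization", "") if isinstance(headers, dict) else ""
    if not auth.startswith("Bearer "):
        problems.append('"Authorization" header should start with "Bearer "')
    else:
        key = auth[len("Bearer "):]
        if key == "API_KEY":
            problems.append("API_KEY placeholder was not replaced")
        elif not key or any(ch.isspace() for ch in key):
            problems.append("API key is empty or contains spaces")
    return problems
```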

What You Get After Setup

After completing this setup, Antigravity IDE will expose two Crawleo MCP tools:

- search_web for real-time web search

- crawl_web for direct page crawling
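Inside the IDE, the agent calls these tools for you. To see what such a call looks like on the wire, here is a sketch of the JSON-RPC 2.0 messages that the MCP specification defines for listing and calling tools. The argument names `query` and `url` are hypothetical: the real parameter names for `search_web` and `crawl_web` come from the schemas the server returns for `tools/list`.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # each JSON-RPC request in a session needs its own id


def rpc(method: str, params: Optional[dict] = None) -> str:
    """Serialize one JSON-RPC 2.0 request as used by MCP."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask the server which tools it offers and what arguments each one takes.
list_tools = rpc("tools/list")

# Hypothetical argument names: confirm them against the tools/list response.
search = rpc("tools/call", {"name": "search_web", "arguments": {"query": "MCP specification"}})
crawl = rpc("tools/call", {"name": "crawl_web", "arguments": {"url": "https://example.com"}})
```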

Troubleshooting

Error: Unauthorized or Stream Failure

If you see the following error:

Error: streamable http connection failed: calling "initialize": sending "initialize": Unauthorized, sse fallback failed: missing endpoint: first event is "", want "endpoint".

Cause:

- An invalid or incorrect Crawleo API key was used

Fix:

- Double-check the API key

- Ensure there are no extra spaces or missing characters

- Confirm the key is active in your Crawleo dashboard

After correcting the key, refresh the MCP servers again.
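To separate a bad key from an IDE problem, you can send the same first message outside Antigravity. This is a minimal Python sketch with several assumptions. It assumes the endpoint follows the MCP streamable HTTP transport, where the client's first request is a JSON-RPC `initialize` POST. It also assumes the server rejects an invalid key with HTTP 401 or 403, which the "Unauthorized" in the error above suggests. The protocol version string `2025-03-26` is one published MCP revision, and the server may negotiate another. The client name is arbitrary.

```python
import json
import urllib.error
import urllib.request

MCP_URL = "https://api.crawleo.dev/mcp"


def build_initialize_request(api_key: str) -> urllib.request.Request:
    """Build the MCP 'initialize' POST that an MCP client sends first."""
    body = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": "2025-03-26",  # assumed revision; servers may negotiate
            "capabilities": {},
            "clientInfo": {"name": "key-check", "version": "0.1"},
        },
    }
    return urllib.request.Request(
        MCP_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Streamable HTTP servers may reply with plain JSON or an SSE stream.
            "Accept": "application/json, text/event-stream",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def check_key(api_key: str, timeout: float = 10.0) -> str:
    """Send the initialize request and summarize the outcome (needs network access)."""
    try:
        with urllib.request.urlopen(build_initialize_request(api_key), timeout=timeout) as resp:
            return f"accepted (HTTP {resp.status})"
    except urllib.error.HTTPError as err:
        if err.code in (401, 403):
            return f"rejected (HTTP {err.code}): check the key in your Crawleo dashboard"
        return f"unexpected server response (HTTP {err.code})"
    except urllib.error.URLError as err:
        return f"network error: {err.reason}"
```

For example, `print(check_key(os.environ["CRAWLEO_API_KEY"]))`, with a placeholder variable name. An "accepted" result only means the key passed authentication. The script does not complete the rest of the MCP handshake.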

Tags

Related Posts

How to Build LLM Training Datasets at Scale with Web Crawling

Training modern large language models requires massive, continuously updated datasets sourced from the open web. This guide explains how AI teams can build scalable, high quality LLM training datasets using web crawling, with practical architecture patterns and best practices.

Rate Limiting, Proxies, and CAPTCHAs: Why DIY Scraping Does Not Scale

Building your own web scraping stack seems simple at first, until rate limits, proxy bans, and CAPTCHAs bring everything to a halt. This article explains why DIY scraping fails at scale and how Crawleo removes these hidden costs for developers and AI teams.

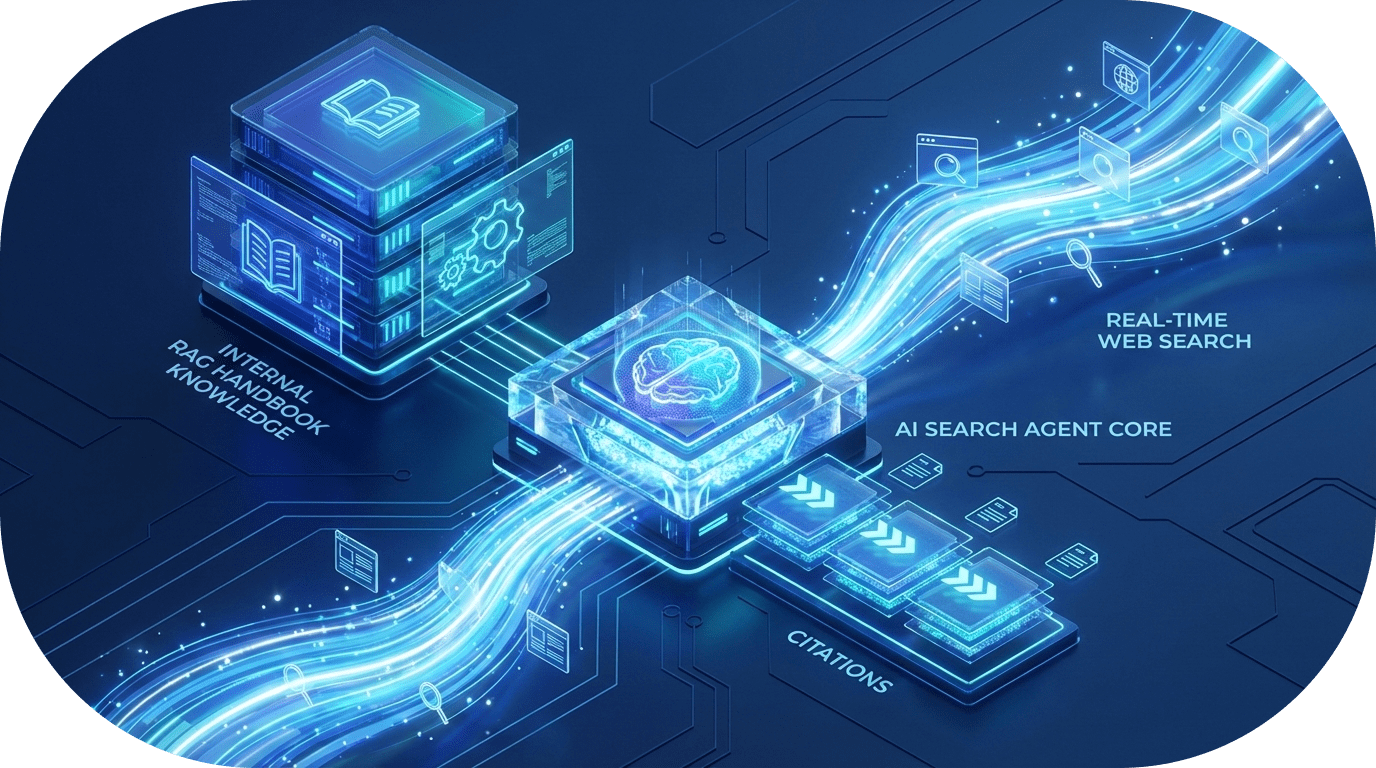

Building Advanced AI Agents: How to Combine RAG with Real-Time Web Search

Learn how to bridge the gap between internal company knowledge and the live web. This guide explores a powerful pattern for building AI agents that can query private handbooks while simultaneously performing filtered real-time web searches to provide the most accurate, cited responses.