Search Engine APIs vs Scraping: A Practical Overview for Developers

Introduction

Search engines are one of the most valuable data sources on the web. Developers rely on search results for SEO tools, market research, AI assistants, and Retrieval-Augmented Generation (RAG) pipelines. The challenge is not finding search engines, but choosing the right way to access their data.

Broadly, there are three approaches:

- Official search engine APIs

- Third-party search APIs

- Direct search engine scraping

Each option comes with tradeoffs in cost, complexity, freshness, and reliability.

Official Search Engine APIs

Major search engines provide official APIs that return structured search results.

Common characteristics:

- Stable and well-documented

- Strict rate limits and quotas

- Limited result depth

- Higher cost at scale

Typical use cases:

- SEO rank tracking

- Simple search result analysis

- Enterprise applications with strict compliance needs

The main drawback is limited flexibility. Official APIs usually return result metadata such as titles, URLs, and snippets, and do not support deep crawling of result pages or rich content extraction.
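To make the "metadata only" point concrete, here is a minimal Python sketch of one official API's request and response shape. It assumes Google's Custom Search JSON API as the example (`key`, `cx`, `q`, `num`, `start` query parameters; an `items` array whose entries carry `title`, `link`, and `snippet`). It only builds the URL and parses a response body, so it runs without credentials; the key and engine ID are placeholders.

```python
import json
from urllib.parse import urlencode

# Example endpoint, assumed for illustration: Google's Custom Search JSON API.
ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def build_search_url(api_key: str, engine_id: str, query: str, start: int = 1) -> str:
    """Build the URL for one page of results (this API returns at most 10 per request)."""
    params = {"key": api_key, "cx": engine_id, "q": query, "num": 10, "start": start}
    return f"{ENDPOINT}?{urlencode(params)}"


def parse_results(payload: str) -> list[dict]:
    """Keep what the API actually returns per result: title, URL, and snippet."""
    data = json.loads(payload)
    return [
        {
            "title": item.get("title", ""),
            "url": item.get("link", ""),
            "snippet": item.get("snippet", ""),
        }
        for item in data.get("items", [])
    ]


if __name__ == "__main__":
    # Placeholder credentials: no request is sent, the URL is only printed.
    print(build_search_url("YOUR_API_KEY", "YOUR_ENGINE_ID", "web scraping api"))
    sample = '{"items": [{"title": "Example", "link": "https://example.com", "snippet": "An example."}]}'
    print(parse_results(sample))
```

Note what is missing from the parsed result: the text of the pages themselves. Getting that requires a separate fetch for every URL.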

Third-Party Search APIs

Third-party providers act as intermediaries between developers and search engines. They handle infrastructure, proxies, and parsing, and expose results through a clean API.

Benefits:

- Easier integration

- Fewer anti-bot issues

- Structured responses

Limitations:

- Pricing can grow quickly with volume

- Often focused only on search results, not page content

- Limited control over output formats

This category is popular for SaaS tools that need search data without maintaining scraping infrastructure.
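One way to limit lock-in and the "limited control over output formats" problem is to normalize every provider's response into your own result type, so switching vendors when pricing changes touches one adapter instead of the whole codebase. The sketch below assumes two hypothetical providers (`ProviderA`, `ProviderB`) with made-up response shapes; the HTTP call is injected as a `fetch` function so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass
class SearchResult:
    title: str
    url: str
    snippet: str


class SearchProvider(Protocol):
    def search(self, query: str) -> list[SearchResult]: ...


class ProviderA:
    """Hypothetical vendor whose response looks like {"organic": [{"title", "link", "snippet"}]}."""

    def __init__(self, fetch: Callable[[str], dict]):
        self._fetch = fetch  # in production this would call the vendor's HTTP API

    def search(self, query: str) -> list[SearchResult]:
        raw = self._fetch(query)
        return [
            SearchResult(r["title"], r["link"], r.get("snippet", ""))
            for r in raw.get("organic", [])
        ]


class ProviderB:
    """Hypothetical vendor whose response looks like {"results": [{"name", "url", "description"}]}."""

    def __init__(self, fetch: Callable[[str], dict]):
        self._fetch = fetch

    def search(self, query: str) -> list[SearchResult]:
        raw = self._fetch(query)
        return [
            SearchResult(r["name"], r["url"], r.get("description", ""))
            for r in raw.get("results", [])
        ]


def top_urls(provider: SearchProvider, query: str, n: int = 3) -> list[str]:
    """Application code depends only on SearchResult, never on a vendor's raw format."""
    return [result.url for result in provider.search(query)[:n]]
```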

Direct Search Engine Scraping

Scraping search engines directly gives the most control, but also the highest operational cost.

Pros:

- Full access to raw results

- Custom parsing logic

- No vendor lock-in

Cons:

- Constant maintenance

- Proxy management

- Anti-bot mitigation

- Higher engineering effort

Direct scraping is usually reserved for teams with strong scraping expertise and dedicated infrastructure.
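Custom parsing logic is where much of the maintenance cost lives. This sketch uses Python's standard `html.parser` to pull result links out of a page, assuming results sit inside elements with a hypothetical `result` class. It covers parsing only; fetching, proxy rotation, and CAPTCHA handling, which are the harder parts, are not shown.

```python
from html.parser import HTMLParser

# Void elements never get an end tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input", "link", "meta", "source", "wbr"}


class ResultLinkParser(HTMLParser):
    """Collect (title, href) pairs from links inside result containers.

    `result_class` is a hypothetical placeholder: real result pages use their own,
    frequently changing markup, which is why code like this needs constant upkeep.
    """

    def __init__(self, result_class: str = "result"):
        super().__init__()
        self.result_class = result_class
        self.depth = 0            # > 0 while inside a result container
        self.current_href = None  # href of the <a> currently being read
        self.current_text = []
        self.results = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if self.depth:
            self.depth += 1
        elif self.result_class in classes:
            self.depth = 1
        if self.depth and tag == "a" and attrs.get("href"):
            self.current_href = attrs["href"]
            self.current_text = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        if tag == "a" and self.current_href is not None:
            title = " ".join("".join(self.current_text).split())  # normalize whitespace
            self.results.append((title, self.current_href))
            self.current_href = None
        self.depth -= 1

    def handle_data(self, data):
        if self.current_href is not None:
            self.current_text.append(data)


def extract_results(html: str, result_class: str = "result") -> list[tuple[str, str]]:
    parser = ResultLinkParser(result_class)
    parser.feed(html)
    parser.close()
    return parser.results
```

When the search engine changes its markup, `result_class` (and often the whole traversal) has to change with it.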

Where Crawleo Fits

Crawleo is designed to sit between traditional APIs and raw scraping.

Instead of returning only search result metadata, Crawleo allows developers to:

- Run real-time search queries

- Automatically crawl result pages

- Receive content in AI-ready formats such as Markdown or clean HTML
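The search-then-crawl pattern can be sketched in a few lines. This is not Crawleo's API or implementation: `search` and `fetch` are hypothetical hooks you would back with a search call and an HTTP client, and the HTML-to-Markdown step is a deliberately tiny converter that handles only headings, paragraphs, list items, and links while dropping `script`, `style`, `nav`, and `footer` content.

```python
import re
from html.parser import HTMLParser
from typing import Callable


class MarkdownConverter(HTMLParser):
    """Tiny HTML-to-Markdown converter: headings, paragraphs, list items, and links."""

    SKIP = {"script", "style", "nav", "footer"}  # boilerplate dropped entirely
    HEADINGS = {"h1": "# ", "h2": "## ", "h3": "### "}

    def __init__(self):
        super().__init__()
        self.parts = []
        self.skip_depth = 0
        self.href = None

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1
        elif self.skip_depth:
            return
        elif tag in self.HEADINGS:
            self.parts.append("\n\n" + self.HEADINGS[tag])
        elif tag in ("p", "ul", "ol"):
            self.parts.append("\n\n")
        elif tag == "li":
            self.parts.append("\n- ")
        elif tag == "a":
            self.href = dict(attrs).get("href")
            self.parts.append("[")

    def handle_endtag(self, tag):
        if tag in self.SKIP:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif not self.skip_depth and tag == "a":
            self.parts.append(f"]({self.href})" if self.href else "]")
            self.href = None

    def handle_data(self, data):
        if not self.skip_depth:
            self.parts.append(re.sub(r"\s+", " ", data))  # collapse runs of whitespace


def html_to_markdown(html: str) -> str:
    converter = MarkdownConverter()
    converter.feed(html)
    converter.close()
    lines = [line.strip() for line in "".join(converter.parts).splitlines()]
    return re.sub(r"\n{3,}", "\n\n", "\n".join(lines)).strip()


def search_and_crawl(query: str,
                     search: Callable[[str], list[str]],
                     fetch: Callable[[str], str],
                     limit: int = 3) -> list[dict]:
    """Search, then crawl the top result URLs and return their content as Markdown.

    `search` (query -> URLs) and `fetch` (URL -> HTML) are hypothetical hooks.
    """
    return [
        {"url": url, "markdown": html_to_markdown(fetch(url))}
        for url in search(query)[:limit]
    ]
```

A production converter would also need to handle tables, code blocks, relative URLs, and main-content detection; the point here is only the shape of the pipeline.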

Key advantages:

- Real-time data, not cached indexes

- Privacy-first, zero-retention design

- Output optimized for AI, RAG, and agents

- No need to manage proxies or scraping logic

This makes Crawleo especially useful for:

- AI assistants that need live web context

- RAG pipelines that require clean page content

- Automation workflows that combine search and crawling
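Clean page content matters because RAG pipelines usually split pages into chunks before embedding them. Below is one simple strategy, assumed for illustration rather than taken from any particular product: pack Markdown paragraphs into chunks up to a character limit (a stand-in for a token budget) and keep the source URL on every chunk so answers can cite it.

```python
def chunk_markdown(markdown: str, source_url: str, max_chars: int = 1000) -> list[dict]:
    """Pack Markdown paragraphs into chunks of at most `max_chars` characters.

    Each chunk keeps its source URL so a generated answer can cite where it came from.
    """
    if max_chars <= 0:
        raise ValueError("max_chars must be positive")
    paragraphs = [p.strip() for p in markdown.split("\n\n") if p.strip()]
    chunks, current = [], ""
    for para in paragraphs:
        # A paragraph longer than the limit is split into fixed-size pieces.
        for start in range(0, len(para), max_chars):
            piece = para[start:start + max_chars]
            candidate = f"{current}\n\n{piece}" if current else piece
            if len(candidate) <= max_chars:
                current = candidate
            else:
                chunks.append(current)
                current = piece
    if current:
        chunks.append(current)
    return [{"source": source_url, "chunk": i, "text": text} for i, text in enumerate(chunks)]
```

Paragraph boundaries are kept where possible because a chunk that ends mid-sentence tends to retrieve and read worse; noisy HTML leftovers would waste the same budget.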

Choosing the Right Approach

The right solution depends on your use case:

- Use official APIs for simple, compliant search access

- Use third-party APIs for convenience and basic search data

- Use Crawleo when you need real-time search combined with clean, crawl-ready content for AI systems

Rather than replacing search engines, Crawleo focuses on making their data easier to use in modern developer workflows.

Final Thoughts

Accessing search engine data is no longer just about links and rankings. Modern applications need context, freshness, and structured content. Understanding the differences between APIs, scraping, and hybrid solutions like Crawleo helps teams choose the most sustainable approach for their product.

Related Posts

How to Build LLM Training Datasets at Scale with Web Crawling

Training modern large language models requires massive, continuously updated datasets sourced from the open web. This guide explains how AI teams can build scalable, high quality LLM training datasets using web crawling, with practical architecture patterns and best practices.

Rate Limiting, Proxies, and CAPTCHAs: Why DIY Scraping Does Not Scale

Building your own web scraping stack seems simple at first, until rate limits, proxy bans, and CAPTCHAs bring everything to a halt. This article explains why DIY scraping fails at scale and how Crawleo removes these hidden costs for developers and AI teams.

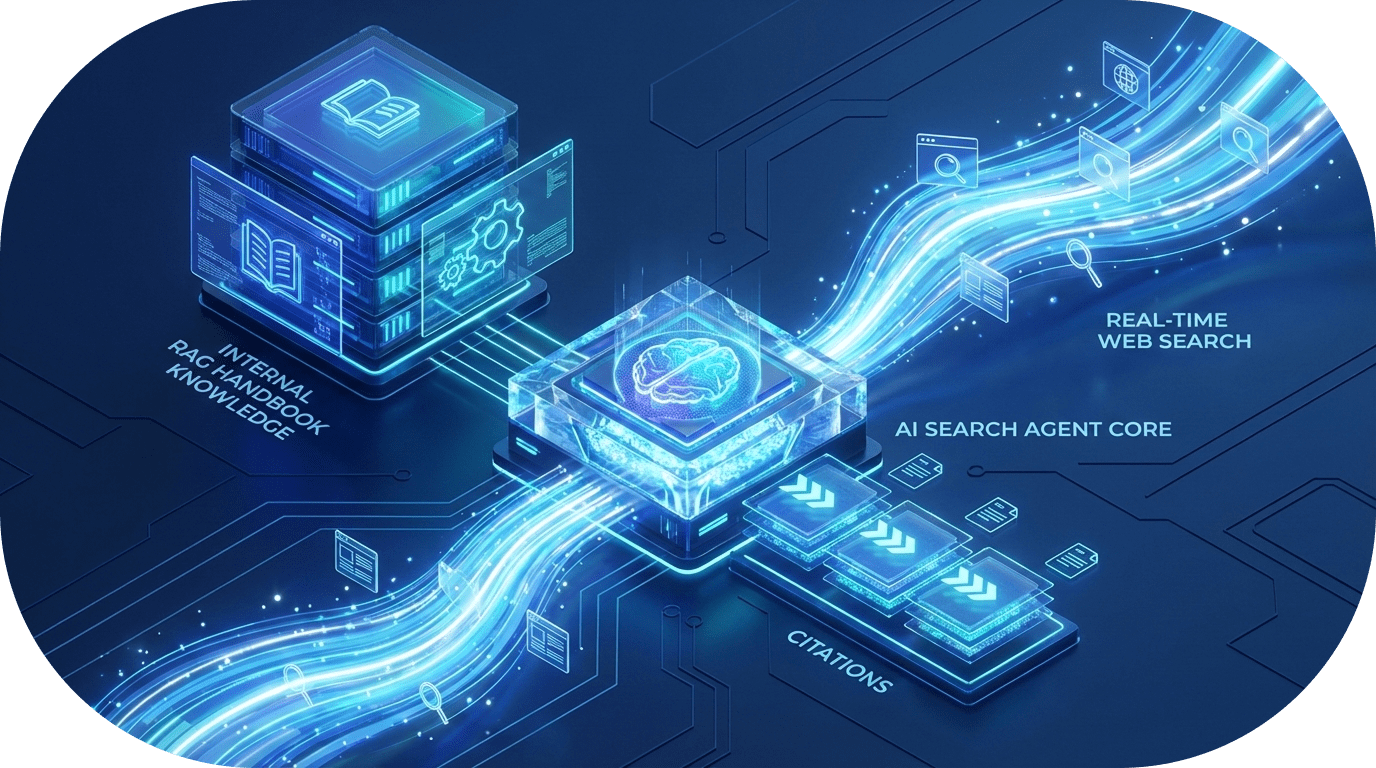

Building Advanced AI Agents: How to Combine RAG with Real-Time Web Search

Learn how to bridge the gap between internal company knowledge and the live web. This guide explores a powerful pattern for building AI agents that can query private handbooks while simultaneously performing filtered real-time web searches to provide the most accurate, cited responses.