Serper.dev vs Crawleo.dev: Features, Pricing, Pros & Cons (2026)

In this article we’ll compare Serper.dev and Crawleo.dev, two modern APIs aimed at developers building real-time search, crawling, and AI-driven applications. We’ll look at what each service offers, their pricing models from official sources, and the key advantages and disadvantages based on multiple reviews and market coverage.

🧠 What Is Serper.dev?

Serper.dev is a Google Search Results API built to deliver structured search data (web, images, news, maps, places, shopping, videos, etc.) from Google’s search results programmatically with minimal setup and fast performance. It’s designed for developers, SEO tools, dashboards, AI agents, and automation workflows needing reliable real-time search information.
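To give a feel for the "minimal setup" claim, here is a minimal sketch of how a search request might be assembled in Python. The endpoint URL, the `X-API-KEY` header name, and the `q` body field are assumptions based on common usage of the service and should be checked against Serper's official documentation; `build_search_request` is a hypothetical helper, not part of any SDK. The sketch only builds the request and does not send it.

```python
import json
import urllib.request

# Assumed endpoint; verify against Serper's official documentation.
SERPER_SEARCH_URL = "https://google.serper.dev/search"


def build_search_request(query: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a JSON search query."""
    # Assumed body shape: a JSON object with the query under "q".
    body = json.dumps({"q": query}).encode("utf-8")
    return urllib.request.Request(
        SERPER_SEARCH_URL,
        data=body,
        # Assumed header name for the API key.
        headers={"X-API-KEY": api_key, "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_search_request("open source web crawlers", "YOUR_API_KEY")
    print(req.get_method(), req.full_url)
```

To actually run a search you would pass the request to `urllib.request.urlopen` and parse the JSON response; the response fields are not shown here because they depend on the search type.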

📊 Serper.dev Pricing

Serper uses a usage-based pricing model: you buy credits as you need them, with no monthly subscription required. Typical credit pricing looks like this:

- 2,500 free queries to start (no credit card required)

- $50 for 50,000 credits (~$1.00 per 1,000 requests)

- Volume pricing can drop to around $0.30 per 1,000 requests for larger credit purchases

This model can be especially cost-effective for high-volume query usage.
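The per-1,000 figures above are simple arithmetic, and a small sketch makes it easy to plug in your own numbers. It assumes one credit is spent per request, as the $50 for 50,000 credits example implies; both function names are illustrative.

```python
def cost_per_thousand(price_usd: float, credits: int) -> float:
    """Return the price of 1,000 requests, assuming one credit per request."""
    if credits <= 0:
        raise ValueError("credits must be positive")
    return price_usd * 1000 / credits


def requests_affordable(budget_usd: float, rate_per_thousand: float) -> int:
    """Return how many whole requests a budget buys at a per-1,000 rate."""
    if rate_per_thousand <= 0:
        raise ValueError("rate_per_thousand must be positive")
    return int(budget_usd / rate_per_thousand * 1000)


if __name__ == "__main__":
    # The entry batch from the list above: $50 for 50,000 credits.
    print(cost_per_thousand(50, 50_000))
    # A $100 budget at the approximate high-volume rate of $0.30 per 1,000.
    print(requests_affordable(100, 0.30))
```

The first call reproduces the $1.00 per 1,000 figure; the second shows that the same budget stretches roughly three times further at the high-volume rate.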

✅ Pros of Serper.dev

- ⚡ Fast and efficient: ~1–2 second response times.

- 💸 Affordable at scale: Low cost per request, especially for large credit purchases.

- 🧑‍💻 Developer-friendly: Simple REST API with structured JSON.

- 🆓 Free tier available: Good entry for testing before buying credits.

- 🛠️ Multiple search types supported: Web, images, maps, news, etc.

❌ Cons of Serper.dev

- 📉 Newer and smaller ecosystem: Fewer advanced tools and integrations compared to larger providers like SerpApi.

- 📚 Limited documentation depth compared to mature enterprise APIs.

- 🧩 Credits expire some months after purchase, which can be inconvenient if they go unused.

🕷️ What Is Crawleo.dev?

Crawleo.dev is a real-time search and crawling API built for AI and automation workflows (RAG pipelines, agents, and intelligent apps) that combines search with deep page crawling, data extraction, and structured outputs. It emphasizes privacy, structured formats, and support for AI contexts.

📊 Crawleo.dev Pricing

Crawleo’s pricing is subscription-based, with monthly credit quotas shared between search and crawl operations. Official pricing (billed yearly) includes:

| Plan | Credits / Month | Price (Billed Yearly) | Remarks |
|---|---|---|---|
| Free Plan | 10K credits | $0 | 1K search requests or 10K crawl pages, email support |
| Developer | 100K credits | $17/mo | Side projects & MVPs |
| Pro | 1M credits | $83/mo | Production use, dedicated support |
| Scale | 3M credits | $167/mo | Large volume use |

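Credits are consumed at different rates for search and crawl: a search request costs more credits than a crawled page. On the Free Plan, for example, 10K credits cover 1K search requests or 10K crawled pages, which implies roughly 10 credits per search and 1 credit per page.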
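Because search and crawl draw from the same credit pool, it helps to work out what a plan buys for your mix of operations. The sketch below assumes 10 credits per search and 1 credit per crawled page, weights inferred from the Free Plan row (10K credits for 1K searches or 10K pages) rather than taken from a published rate card; the prices are the yearly-billed figures from the table.

```python
# Credit weights inferred from the Free Plan row; treat them as assumptions.
CREDITS_PER_SEARCH = 10
CREDITS_PER_CRAWL_PAGE = 1

# Plan name -> (monthly credits, USD per month when billed yearly).
PLANS = {
    "Developer": (100_000, 17),
    "Pro": (1_000_000, 83),
    "Scale": (3_000_000, 167),
}


def credits_needed(searches: int, crawl_pages: int) -> int:
    """Return the credits a mix of searches and crawled pages would consume."""
    return searches * CREDITS_PER_SEARCH + crawl_pages * CREDITS_PER_CRAWL_PAGE


def cost_per_thousand_searches(plan: str) -> float:
    """Return USD per 1,000 searches if the whole quota is spent on search."""
    credits, price = PLANS[plan]
    searches = credits // CREDITS_PER_SEARCH
    return price * 1000 / searches


if __name__ == "__main__":
    print(credits_needed(searches=500, crawl_pages=5_000))
    for name in PLANS:
        print(name, round(cost_per_thousand_searches(name), 2))
```

Under these assumptions, a search-only workload costs about $1.70 per 1,000 searches on Developer, $0.83 on Pro, and $0.56 on Scale, while crawl-heavy workloads get ten times as many operations out of the same quota.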

✅ Pros of Crawleo.dev

- 🕸️ All-in-one search + crawl API: Combines search and deep crawling in one service.

- 🤖 AI-ready outputs: Structured HTML, Markdown, and JSON for RAG and AI workflows.

- 🔒 Privacy first: Zero data retention and secure handling.

- 🏎️ Real-time results: Designed for fast search and extraction.

- 📈 Flexible pricing tiers: From free to high-volume business plans.

❌ Cons of Crawleo.dev

- 💰 Subscription fees may be harder to justify for occasional or low-volume users compared to pure pay-as-you-go models.

- 📊 Credit complexity: Search vs crawl credit use can be confusing at first.

- 🧩 Concurrency limits on lower plans.

🔍 Quick Comparison

| Feature | Serper.dev | Crawleo.dev |
|---|---|---|
| Type | SERP (Google search API) | Web search + crawling API |
| Pricing Model | Pay-per-credit (usage-based) | Subscription with monthly quotas |
| Free Tier | Yes (free credits) | Yes (Free Plan) |
| Best For | Search queries & SEO tools | Deep crawling + structured extraction |
| AI/LLM Integration | Yes, JSON outputs | Yes, optimized for RAG workflows |
| Ease of Use | Simple API focus | More endpoints and options |
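The pricing-model row is where the two services differ most in practice, so here is a rough sketch for comparing monthly cost at a given search volume. It is a simplification under stated assumptions: Serper is priced at a flat per-1,000 rate (default $1.00, the entry batch), Crawleo searches cost 10 credits each (inferred from the Free Plan quota), and crawling is ignored entirely. The function names are illustrative.

```python
from typing import Optional, Tuple

# (plan name, monthly credits, USD per month billed yearly), from the article.
CRAWLEO_PLANS = [
    ("Free Plan", 10_000, 0),
    ("Developer", 100_000, 17),
    ("Pro", 1_000_000, 83),
    ("Scale", 3_000_000, 167),
]
CREDITS_PER_SEARCH = 10  # assumption inferred from the Free Plan quota


def smallest_crawleo_plan(searches_per_month: int) -> Optional[Tuple[str, int]]:
    """Return (plan, monthly price) for the cheapest plan covering the volume."""
    needed = searches_per_month * CREDITS_PER_SEARCH
    for name, credits, price in CRAWLEO_PLANS:
        if credits >= needed:
            return name, price
    return None  # volume exceeds the largest listed plan


def serper_monthly_cost(searches_per_month: int,
                        usd_per_thousand: float = 1.0) -> float:
    """Return the pay-as-you-go cost at a flat per-1,000 rate."""
    return searches_per_month * usd_per_thousand / 1000


if __name__ == "__main__":
    for volume in (1_000, 5_000, 100_000):
        print(volume, serper_monthly_cost(volume), smallest_crawleo_plan(volume))
```

With these inputs, low search-only volumes tend to favor pay-as-you-go (5,000 searches is $5 against a $17 Developer plan), while at 100,000 searches the $83 Pro plan undercuts the $100 entry-rate cost. Serper's volume discounts and any crawling you need would shift that comparison.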

Tags

Related Posts

How to Build LLM Training Datasets at Scale with Web Crawling

Training modern large language models requires massive, continuously updated datasets sourced from the open web. This guide explains how AI teams can build scalable, high quality LLM training datasets using web crawling, with practical architecture patterns and best practices.

Rate Limiting, Proxies, and CAPTCHAs: Why DIY Scraping Does Not Scale

Building your own web scraping stack seems simple at first, until rate limits, proxy bans, and CAPTCHAs bring everything to a halt. This article explains why DIY scraping fails at scale and how Crawleo removes these hidden costs for developers and AI teams.

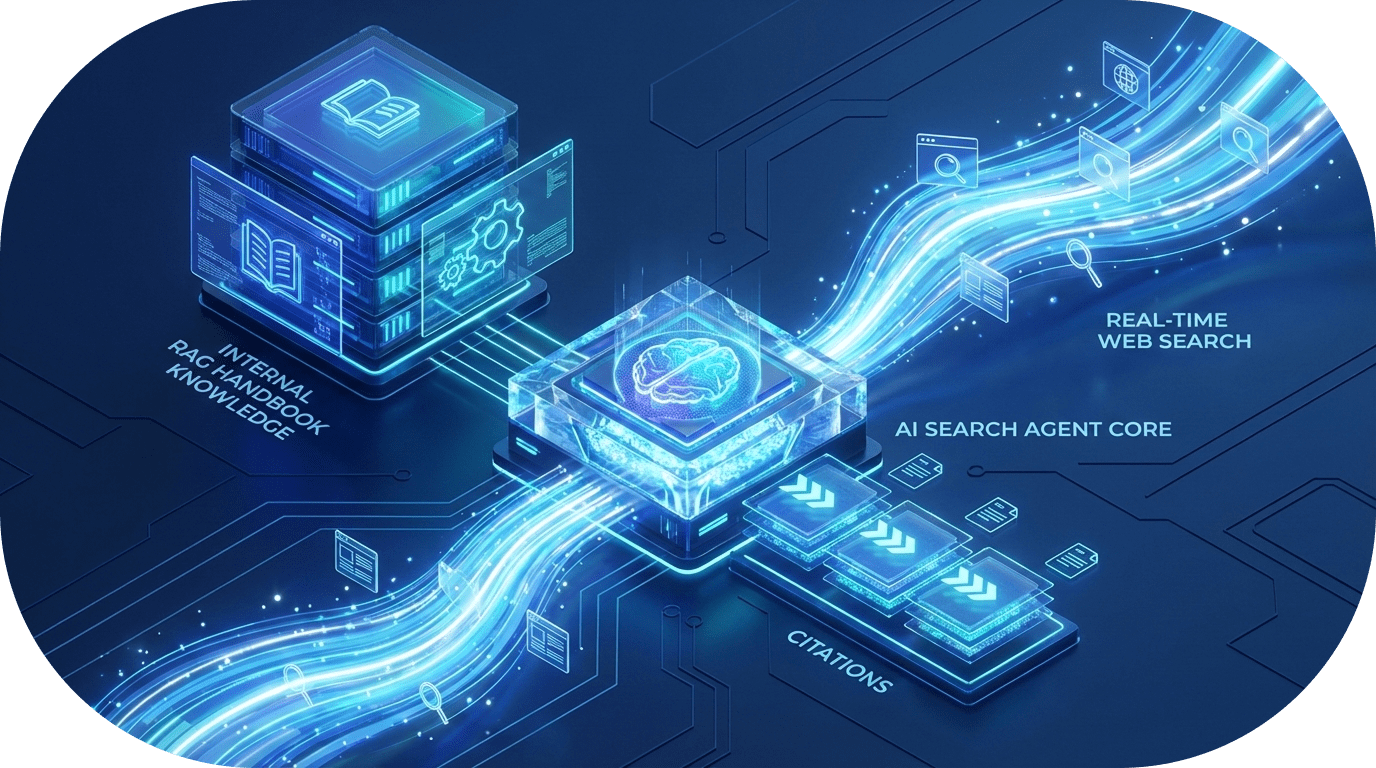

Building Advanced AI Agents: How to Combine RAG with Real-Time Web Search

Learn how to bridge the gap between internal company knowledge and the live web. This guide explores a powerful pattern for building AI agents that can query private handbooks while simultaneously performing filtered real-time web searches to provide the most accurate, cited responses.